Introduction

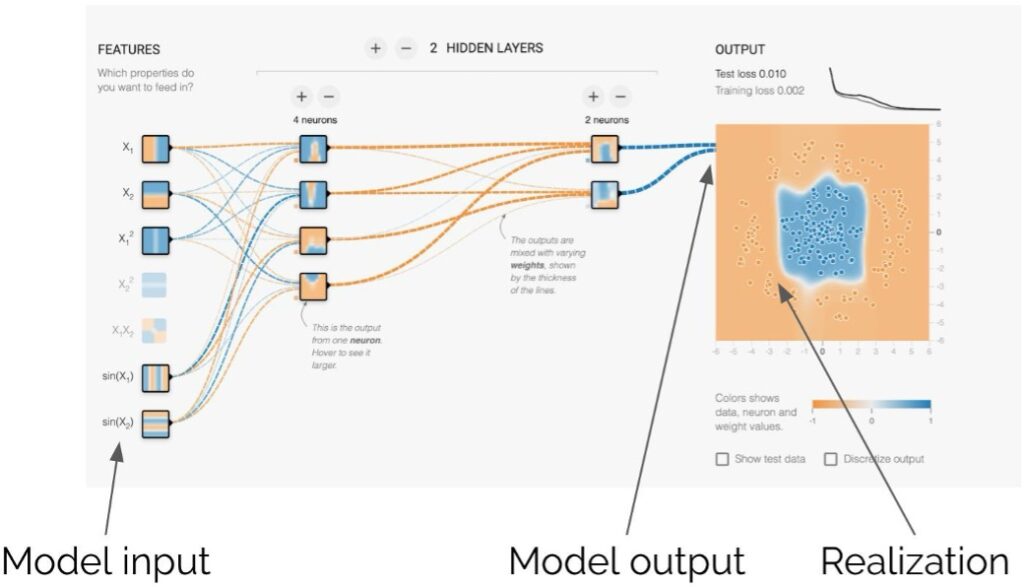

Model risk management (MRM) is the art of handling the inherent uncertainty related to mathematical modeling. We create algorithms for many different reasons. In the past, most models were built to study the evolution of dynamical systems (e.g. a credit risk model or a valuation model), and they were often created from first principles with analytical tractability in mind. Nowadays, machine learning (ML) models are everywhere, influencing our individual behavior and changing the dynamics of entire societies. With such pervasive use of models, understanding the risks involved becomes mandatory, since the consequences of model failure can be massive.

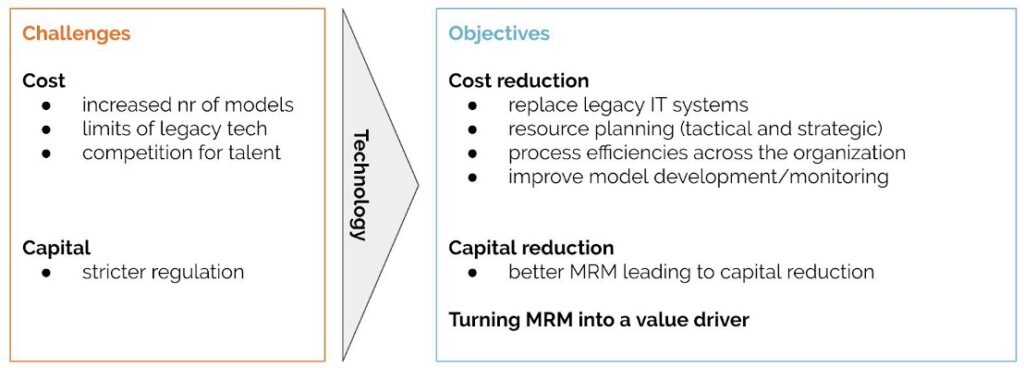

This is why governments and regulators are exerting continuously growing pressure to strengthen MRM requirements and improve AI governance. As a result, financial organizations are looking to technology to address these challenges. In this white paper we expand on this topic.

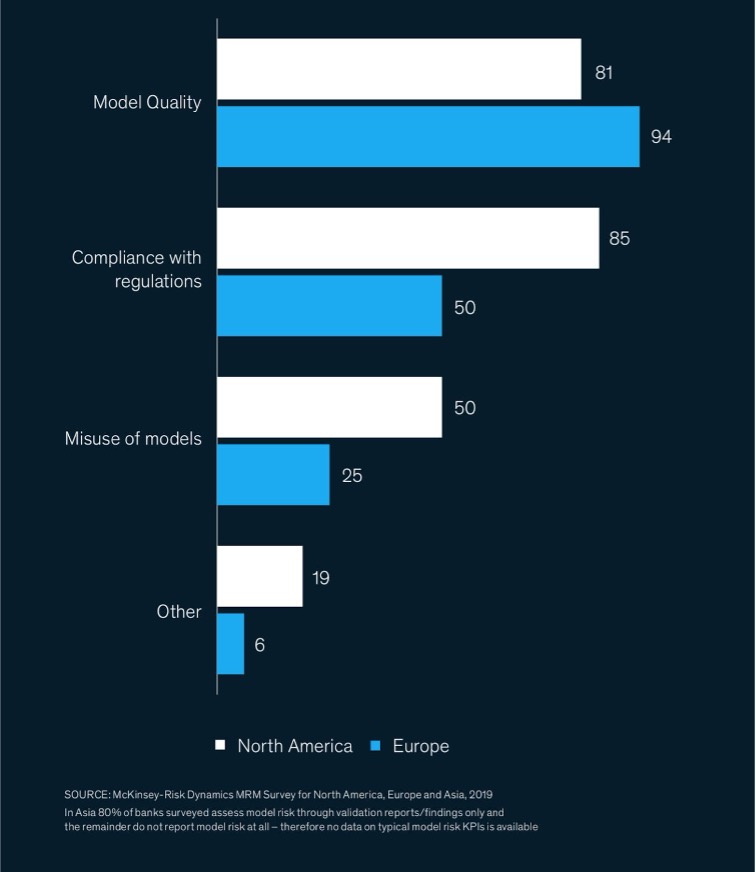

Although model risk tiering and the quantification of model risk and uncertainty are very powerful concepts, defining them is not straightforward. This is because a model tier is a multi-dimensional quantity: unlike, e.g., market risk, model risk cannot be summarized in a single number. For model risk, the three main components are:

- Model quality: this includes data quality, data stability, model performance, differences with alternative approaches, etc.

- Compliance with regulations: this is a challenging topic in itself because regulatory impact is a relative concept. Indeed, for a large global institution, the risk associated with a particular model that is used in a small country (such as Belgium) may be minimal, whereas for the local supervisor this model could be one of the most critical ones within its remit.

- Misuse of models: one of the key questions related to model risk is whether a model is fit for purpose. This is, however, not a static judgement, because both the data and the use of the model can change gradually over time. Properly defining this and measuring the risk of misuse is a challenge common to many institutions.
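To make the multi-dimensional nature of a model tier concrete, the Python sketch below keeps the three components as separate scores instead of collapsing them into one number. The three-level scale, the rule that the worst dimension drives the tier, and the model identifier are assumptions made for illustration, not a prescribed tiering methodology.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical ordinal score per dimension; higher means riskier."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class ModelRiskProfile:
    """Keeps the three components separate instead of summing them into one number."""
    model_id: str
    quality: Level      # data quality/stability, performance, gaps vs. alternative approaches
    compliance: Level   # regulatory impact, as seen by the relevant supervisor
    misuse: Level       # risk that the model is no longer fit for purpose

    def dimensions(self) -> dict[str, Level]:
        return {"quality": self.quality, "compliance": self.compliance, "misuse": self.misuse}

    def tier(self) -> int:
        # Illustrative rule only: the worst dimension drives the tier, so a good
        # score on one axis cannot offset a poor score on another.
        return int(max(self.dimensions().values()))

    def drivers(self) -> list[str]:
        # Report which dimensions determine the tier, preserving the multi-dimensional view.
        worst = max(self.dimensions().values())
        return [name for name, level in self.dimensions().items() if level == worst]


profile = ModelRiskProfile("pd-retail-be", Level.LOW, Level.HIGH, Level.MEDIUM)
print(profile.tier(), profile.drivers())  # 3 ['compliance']
```

Reporting the drivers next to the tier keeps the information that a single score would lose: two models with the same tier can be risky for entirely different reasons.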

1 https://www.mckinsey.com/business-functions/risk/our-insights/the-evolution-of-model-risk-management

2 https://www2.deloitte.com/content/dam/Deloitte/dk/Documents/financial-services/deloitte-nl-global-model-practice-survey.pdf

More than regulatory validation

Customers are becoming increasingly sensitive to the impact of algorithms on their daily lives. This is driven by the many recent incidents in which bias or unfairness was discovered in AI systems.3 Deploying a model that develops this type of issue therefore carries a large reputational risk.

When dealing with these issues, the most important task is to put sufficient governance in place. Among other things, this implies that the various types of potential model issues are exhaustively listed, measured and, if necessary, remediated. In the context of AI, the main points of attention are:

- Bias: the decisions of the algorithm show a systematic trend, deemed incorrect, based on a particular feature of a client. This type of issue often appears when the data is imbalanced. Minorities are, by construction, underrepresented in the datasets used for training; as a consequence, the algorithm generalizes over those data points rather than learning their specific patterns, which can lead to unwanted outcomes.

- Unfairness: the decisions depend on a so-called protected feature, i.e. an input to which we do not want the model to be sensitive. Examples of protected features are race, gender, mother tongue, etc. The challenge here is to define clearly what behavior the algorithm is expected to display: demographic parity (i.e. enforcing the same outcome for the various values of a protected feature) is not always considered fair either.

- Explainability: when algorithms are used to make impactful decisions, it is important to be able to explain each decision. This requires at least an understanding of the driving factors that led to a particular result, but it does not necessarily require the algorithm to be overly simple. A well-known example of such a local explainability technique is LIME.4
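The bias and unfairness points above can be made measurable. The Python sketch below uses made-up decisions and a hypothetical protected feature with values `a` and `b`. It computes each group's share of the data (a simple signal of underrepresentation) and the demographic parity gap, i.e. the largest difference in positive-decision rate between groups. Since parity is not always the right fairness criterion, a non-zero gap should be read as a prompt for investigation rather than a verdict; explainability techniques such as LIME are not covered by this sketch.

```python
from collections import defaultdict


def group_shares(groups: list[str]) -> dict[str, float]:
    """Share of each value of the protected feature; small shares flag underrepresentation."""
    counts: dict[str, int] = defaultdict(int)
    for g in groups:
        counts[g] += 1
    return {g: c / len(groups) for g, c in counts.items()}


def positive_rates(decisions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive decisions (1) per value of the protected feature."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for d, g in zip(decisions, groups):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions: list[int], groups: list[str]) -> float:
    """Largest difference in positive-decision rate between any two groups (0.0 = parity)."""
    rates = positive_rates(decisions, groups).values()
    return max(rates) - min(rates)


decisions = [1, 1, 0, 1, 0, 0, 0, 0]  # made-up model decisions (1 = approve)
groups = ["a"] * 6 + ["b"] * 2        # made-up values of a protected feature
print(group_shares(groups))                       # {'a': 0.75, 'b': 0.25}
print(demographic_parity_gap(decisions, groups))  # 0.5
```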

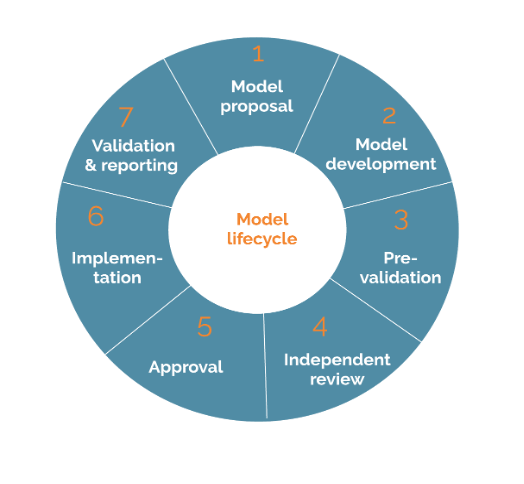

Process related data

MRM means managing the model lifecycle. This implies that various teams (model developers, model validators, auditors, risk committees, …) have to collaborate to move through the lifecycle efficiently. A good MRM system should therefore capture data related to this process, such as:

- The amount of time spent per model per step in the lifecycle

- The number of iterations and communications between different teams

- Quality measures of the documents that have been created (e.g. available sections, quality of written text, etc.)

Access to these datasets will allow managers to better plan resource allocation to meet internal and regulatory deadlines. In addition, operational leaders will be able to identify bottlenecks and streamline existing processes.
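As an illustration of how such process data could be exploited, the sketch below assumes a hypothetical event log with one record per completed lifecycle step. From it, it derives the average time spent per step, the number of iterations per model and step, and the step with the longest average duration as a candidate bottleneck. The log format, model identifiers and dates are invented for the example.

```python
from collections import defaultdict
from datetime import date

# Hypothetical event log: (model_id, lifecycle step, started, finished), ISO dates.
# A repeated (model_id, step) pair means the step had to be iterated.
events = [
    ("m1", "development", "2024-01-01", "2024-01-21"),
    ("m1", "validation", "2024-02-01", "2024-02-29"),
    ("m1", "validation", "2024-03-01", "2024-03-13"),
    ("m2", "development", "2024-01-05", "2024-01-15"),
    ("m2", "validation", "2024-02-01", "2024-02-21"),
]


def days(start: str, end: str) -> int:
    """Calendar days between two ISO dates."""
    return (date.fromisoformat(end) - date.fromisoformat(start)).days


def step_statistics(log: list[tuple[str, str, str, str]]):
    """Average duration (days) per step and number of iterations per (model, step)."""
    durations: dict[str, list[int]] = defaultdict(list)
    iterations: dict[tuple[str, str], int] = defaultdict(int)
    for model_id, step, started, finished in log:
        durations[step].append(days(started, finished))
        iterations[(model_id, step)] += 1
    avg_days = {step: sum(d) / len(d) for step, d in durations.items()}
    return avg_days, dict(iterations)


avg_days, iterations = step_statistics(events)
bottleneck = max(avg_days, key=avg_days.get)
print(avg_days)    # {'development': 15.0, 'validation': 20.0}
print(bottleneck)  # validation
```

In practice the same aggregation would run over the data stored by the MRM system itself; the point of the sketch is only that such questions become simple queries once the process data is captured.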

Similarly, MRM departments should gather metadata on the available datasets. This should include the growth rate of the data, the number of modifications or corrections applied to each dataset, the number of structural changes in the data, etc. Having access to such process-related data will allow a financial institution to streamline its MRM processes and improve its resource allocation strategy.
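The dataset metadata listed above could be derived from snapshots recorded at each refresh. The sketch below assumes a hypothetical `Snapshot` record holding the row count, the column names and the number of corrected records. It computes the average growth rate per refresh (geometric mean of the row-count ratios), the total number of corrections, and the number of refreshes in which the set of columns changed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical metadata recorded at each refresh of a dataset."""
    row_count: int
    columns: tuple[str, ...]
    corrections: int  # records corrected during this refresh


def dataset_metadata(history: list[Snapshot]) -> dict[str, float]:
    """Summarize growth, corrections and schema changes over a snapshot history."""
    first, last = history[0], history[-1]
    refreshes = len(history) - 1
    # Average growth per refresh: geometric mean of the row-count ratios.
    growth = (last.row_count / first.row_count) ** (1 / refreshes) - 1 if refreshes else 0.0
    # A structural change is any refresh in which the set of columns differs from the previous one.
    structural_changes = sum(
        set(prev.columns) != set(curr.columns) for prev, curr in zip(history, history[1:])
    )
    return {
        "growth_rate": growth,
        "corrections": sum(s.corrections for s in history),
        "structural_changes": structural_changes,
    }


history = [
    Snapshot(1000, ("loan_id", "income"), 0),
    Snapshot(1500, ("loan_id", "income"), 3),
    Snapshot(2250, ("loan_id", "income", "region"), 2),
]
meta = dataset_metadata(history)
print(round(meta["growth_rate"], 3), meta["corrections"], meta["structural_changes"])  # 0.5 5 1
```

Comparing column sets ignores pure reordering of columns; whether a reordering counts as a structural change is a policy choice left open here.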

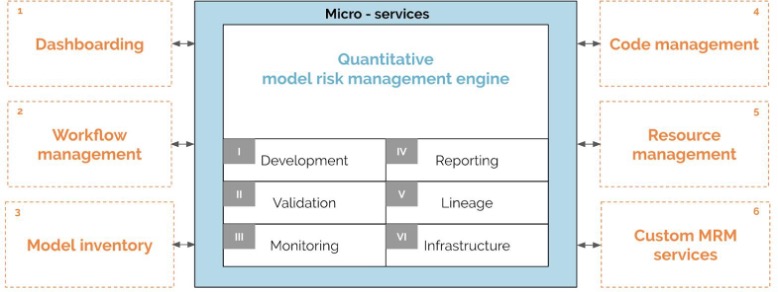

The first key requirement of a good MRM system is therefore the ability to collect, store and expose all of this data. This functionality needs to be implemented with extensive authentication and authorization protocols, so that information can be shared while independence between teams is guaranteed.
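The authorization part of this requirement can be sketched as a deny-by-default, role-based policy. The roles, artifact types and permissions below are hypothetical and only illustrate the independence principle: each team can read the other team's artifacts but can edit only its own, while auditors have read-only access. Authentication (establishing who the user is) is assumed to be handled elsewhere, for example by the institution's identity provider, and is not shown.

```python
from enum import Enum


class Role(Enum):
    DEVELOPER = "developer"
    VALIDATOR = "validator"
    AUDITOR = "auditor"


# Hypothetical policy: (role, artifact type) -> allowed actions.
# Developers can read validation reports but not edit them, and vice versa for validators.
POLICY: dict[tuple[Role, str], set[str]] = {
    (Role.DEVELOPER, "model_documentation"): {"read", "write"},
    (Role.DEVELOPER, "validation_report"): {"read"},
    (Role.VALIDATOR, "model_documentation"): {"read"},
    (Role.VALIDATOR, "validation_report"): {"read", "write"},
    (Role.AUDITOR, "model_documentation"): {"read"},
    (Role.AUDITOR, "validation_report"): {"read"},
}


def is_allowed(role: Role, artifact: str, action: str) -> bool:
    """Deny by default: only actions explicitly listed in the policy are permitted."""
    return action in POLICY.get((role, artifact), set())


print(is_allowed(Role.VALIDATOR, "model_documentation", "read"))  # True
print(is_allowed(Role.DEVELOPER, "validation_report", "write"))   # False
```

Denying by default means that a newly introduced artifact type is invisible to everyone until a permission is granted explicitly, which errs on the side of preserving independence.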

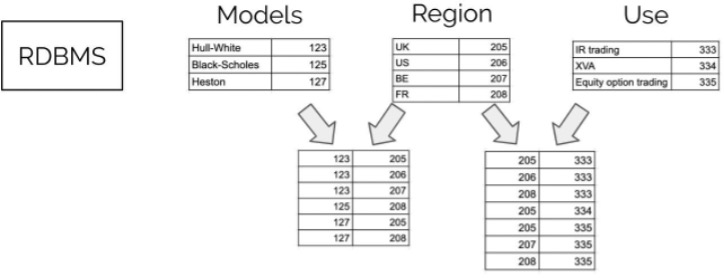

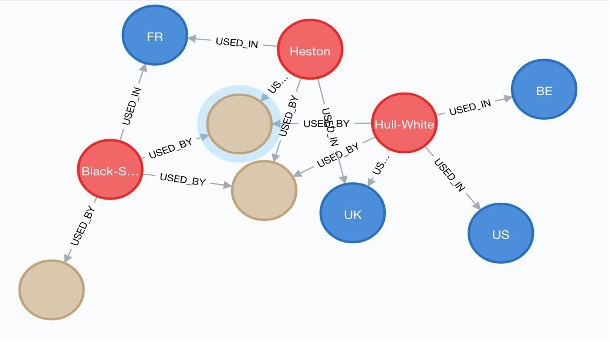

A graph-based model inventory is consistent with our two key technology requirements. First of all, it is data-centric, since it can naturally represent the inventory together with its dependencies (data feeding into the model, performance data, etc.).

Moreover, contemporary graph databases are easy to integrate with and are, as such, a good example of modularity.
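To illustrate why a graph is a natural fit for the inventory, the sketch below uses a plain Python adjacency map as a stand-in for a graph database; all node names are hypothetical. A breadth-first traversal answers a typical inventory question: which models and artifacts are affected, directly or indirectly, if a given dataset changes? In a graph database, the same question would be expressed as a traversal query in that database's own query language.

```python
from collections import deque

# Hypothetical inventory: each edge points from an upstream node to the nodes that depend on it.
EDGES: dict[str, list[str]] = {
    "dataset:loan_applications": ["model:pd_retail"],
    "dataset:macro_scenarios": ["model:pd_retail", "model:lgd_retail"],
    "model:pd_retail": ["model:ecl_provisioning", "report:pd_performance"],
    "model:lgd_retail": ["model:ecl_provisioning"],
    "model:ecl_provisioning": [],
}


def downstream(node: str, edges: dict[str, list[str]]) -> set[str]:
    """All nodes reachable from `node` (breadth-first), i.e. everything affected if it changes."""
    seen: set[str] = set()
    queue = deque([node])
    while queue:
        for child in edges.get(queue.popleft(), []):
            if child not in seen:  # the `seen` set also protects against cycles
                seen.add(child)
                queue.append(child)
    return seen


affected = downstream("dataset:macro_scenarios", EDGES)
print(sorted(n for n in affected if n.startswith("model:")))
# ['model:ecl_provisioning', 'model:lgd_retail', 'model:pd_retail']
```

Note that `model:ecl_provisioning` is reached only indirectly, through the models it consumes; this kind of transitive dependency is what a flat, spreadsheet-style inventory tends to miss.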

Executing business processes

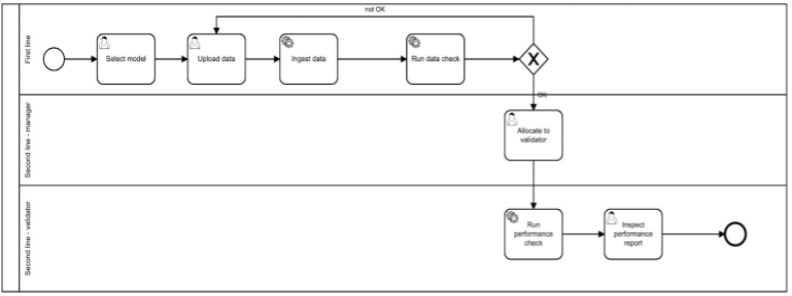

As a second example, we illustrate how to structure business processes. A systematic way to represent such processes is the so-called BPMN (Business Process Model and Notation) 2.0 representation.7 This graphical language can represent all model risk management processes in detail, also indicating who (or which team) has to execute a task, which tasks are automated, what the decision process is, etc.

Moreover, once a BPMN process has been designed, it can be executed by a workflow engine. Since BPMN is a standard, many open-source solutions exist, such as Camunda,8 Activiti,9 Flowable10 and many more.

Once a workflow process is deployed, it can be executed, which means that the process is run in a reproducible fashion, with all data stored systematically. In this way, ad-hoc collaboration between teams via email is transformed into a string of tasks that are executed systematically and reproducibly.

As an example, let’s look at a process to request an independent model review (IMR). The BPMN diagram is shown below.

When a modeler is ready to ask for an IMR, she initiates the process. First, she specifies the model that needs to be validated, and then she submits documentation and data. After this manual task, an automated process is started to verify the quality and completeness of the submitted data. If issues are discovered, the modeler has to resubmit an improved dataset; if, on the other hand, the data is clean, the process continues.
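The automated data quality and completeness step could, for instance, look like the sketch below. It assumes the submission is a list of records (dictionaries) and applies two illustrative checks: missing values in required columns and duplicated identifiers. The column names are hypothetical, and a real check would be tailored to each model. A non-empty list of issues would send the process back to the modeler for resubmission.

```python
def check_submission(rows: list[dict], required: list[str], key: str) -> list[str]:
    """Return a list of issues; an empty list means the submission may proceed."""
    if not rows:
        return ["dataset is empty"]
    issues = []
    # Completeness: every required column must be present and populated in every record.
    for col in required:
        missing = sum(1 for r in rows if r.get(col) is None)
        if missing:
            issues.append(f"{col}: {missing} missing value(s)")
    # Uniqueness: duplicated identifiers usually point to a faulty data extraction.
    keys = [r.get(key) for r in rows]
    duplicates = len(keys) - len(set(keys))
    if duplicates:
        issues.append(f"{key}: {duplicates} duplicate(s)")
    return issues


rows = [
    {"loan_id": 1, "income": 50_000, "default": 0},
    {"loan_id": 2, "income": None, "default": 1},
    {"loan_id": 2, "income": 42_000, "default": 0},
]
print(check_submission(rows, ["loan_id", "income", "default"], "loan_id"))
# ['income: 1 missing value(s)', 'loan_id: 1 duplicate(s)']
```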

Next, a task is assigned to the manager of the validation team, who chooses the validator that will perform this particular model validation exercise. Once this is done, a set of initial tests is executed automatically, after which the task arrives in the validator's inbox.
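To show what executing the IMR process means in terms of stored data, here is a toy, in-memory Python stand-in for a workflow engine. It is neither BPMN nor the API of Camunda or any other engine; the step names, the callbacks and the validator name are hypothetical. Every step appends an entry to an audit log, which is exactly the kind of process data a real engine would persist.

```python
from dataclasses import dataclass, field
from typing import Callable

Rows = list[dict]


@dataclass
class IMRProcess:
    """Toy stand-in for a workflow engine running the IMR request process."""
    model_id: str
    log: list[tuple[str, str]] = field(default_factory=list)  # (step, outcome)

    def record(self, step: str, outcome: str) -> None:
        self.log.append((step, outcome))

    def run(
        self,
        submissions: list[Rows],
        check: Callable[[Rows], list[str]],
        choose_validator: Callable[[str], str],
        initial_tests: Callable[[Rows], bool],
    ) -> str:
        # 1. Automated data check; each rejected upload requires a resubmission.
        for attempt, data in enumerate(submissions, start=1):
            issues = check(data)
            self.record("data_quality_check", f"attempt {attempt}: {'ok' if not issues else 'rejected'}")
            if not issues:
                break
        else:
            # No clean submission was received, so the process stops here.
            self.record("process", "stopped")
            return "stopped"
        # 2. Human task: the validation manager assigns a validator.
        validator = choose_validator(self.model_id)
        self.record("assign_validator", validator)
        # 3. Automated initial tests, after which the task lands in the validator's inbox.
        self.record("initial_tests", "passed" if initial_tests(data) else "failed")
        self.record("validator_inbox", validator)
        return validator


proc = IMRProcess("pd_retail")
result = proc.run(
    submissions=[[{"income": None}], [{"income": 42_000}]],  # first upload is incomplete
    check=lambda rows: [] if all(r["income"] is not None for r in rows) else ["income missing"],
    choose_validator=lambda model_id: "validator_a",
    initial_tests=lambda rows: len(rows) > 0,
)
for step, outcome in proc.log:
    print(step, "->", outcome)
```

In this run, the log records the rejected first upload, the accepted second one, the assignment of `validator_a`, the initial tests and the hand-over to the validator's inbox. A real workflow engine would additionally persist timestamps and user identities for each step.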

By executing such a process, we put the responsibility for providing clean data entirely in the hands of the model developer, which is a huge efficiency gain for the validator. In addition, we start gathering data on the entire process, which enables further efficiency improvements down the line.

This approach is again compatible with our two key technology requirements: the setup is modular, as the BPMN process engine is a separate entity from the quantitative validation engine, and it is data-centric, as we store all the metadata related to process execution.

Conclusions

MRM is going through an industrialization phase. This transition necessitates the introduction of new technology to empower model risk managers to turn model risk management into a value driver. By investing in technology now, institutions will be able to capture the added value of advanced analytics in a sustainable fashion.

About the author

Jos Gheerardyn has built the first FinTech platform that uses AI for real-time model testing and validation on an enterprise-wide scale. A zealous proponent of model risk governance & strategy, Jos is on a mission to empower quants, risk managers and model validators with smarter tools to turn model risk into a business driver. Prior to his current role, he has been active in quantitative finance both as a manager and as an analyst.