Artificial Intelligence has become an increasingly integral part of today’s software applications. Its capabilities in predictive analytics, personalisation, decision-making, and automation are unlocking unprecedented value across multiple industries. However, alongside this rapid adoption of AI applications, organisations are grappling with new and unique challenges in ensuring the security of these systems.

Traditional IT security policies and procedures are no longer entirely sufficient. They must be extended to address the security demands that are specific to AI applications. In this blog post, we explore why this extension is necessary and how model risk management (MRM) principles can be leveraged to introduce additional controls and procedures.

Why Extend Traditional IT Security for AI Applications?

The crucial distinction between traditional software and AI-driven applications is that the former follows explicitly programmed instructions, whereas the latter relies on algorithms that are trained on data. This difference gives rise to security challenges that are specific to AI:

Data Dependence and Privacy

AI applications require vast amounts of data for training. This data could include sensitive or personally identifiable information, presenting specific data security and privacy challenges. Traditional software can, of course, also be used to process sensitive information. However, developing an AI application typically requires far larger volumes of data, whereas small samples usually suffice to develop and test traditional software.

Opaque Decision-Making

Given the complexity of many machine learning algorithms, the actual decision-making process may depend on millions of parameters, which makes it practically impossible to trace; this is the so-called black box problem. This opacity makes it harder to understand, predict, and especially control the behaviour of the application. In contrast, traditional software is rule-based, which makes it easier to debug and understand.

Adversarial Attacks

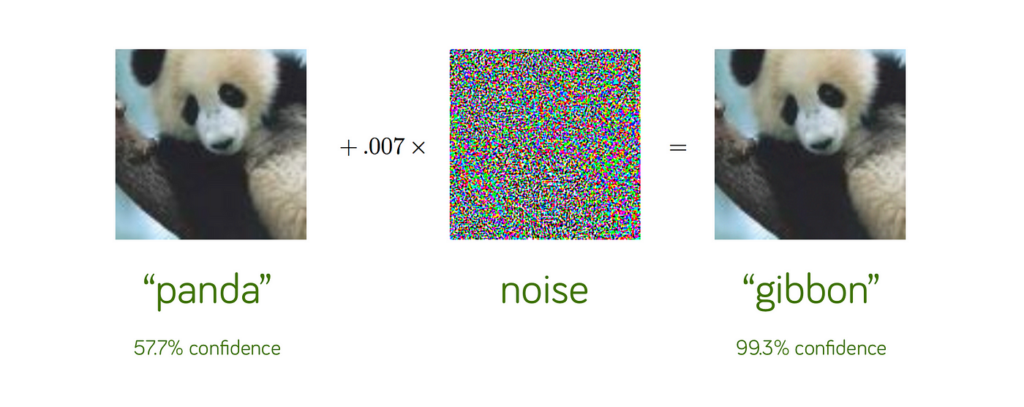

One consequence of the complexity of AI models is that they can be susceptible to security threats of their own, such as adversarial attacks. In such an attack, the model is tricked into making incorrect predictions or decisions by a minor but highly tuned change in the inputs that is often undetectable by a human. Adversarial attacks can be used to exploit the application and even to steer its behaviour.

Source: Explaining and Harnessing Adversarial Examples, Goodfellow et al., ICLR 2015
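
To make the idea of a minor but highly tuned change concrete, the sketch below applies the fast gradient sign method from the paper cited above to a toy logistic regression model. The weights `w`, bias `b`, input `x`, and step size `epsilon` are hypothetical values chosen purely for illustration; real attacks target far larger models, but the mechanism is the same: every input feature is nudged by at most `epsilon` in the direction that increases the model's loss.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, w, b):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_perturb(x, y, w, b, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    p = predict(x, w, b)
    # For binary cross-entropy loss, the gradient with respect to the
    # input x of a logistic model is (p - y) * w.
    grad_x = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad_x)]

# Hypothetical toy model and input (assumptions, not taken from the post).
w = [2.0, -3.0, 1.0, 0.5]
b = 0.1
x = [0.3, 0.1, 0.2, 0.4]
y = 1.0  # true label

x_adv = fgsm_perturb(x, y, w, b, epsilon=0.15)
p_clean = predict(x, w, b)    # above 0.5: classified as class 1
p_adv = predict(x_adv, w, b)  # below 0.5: classification flips to class 0
print(f"clean: {p_clean:.3f}, adversarial: {p_adv:.3f}")
```

In this toy setting, no feature moves by more than 0.15, yet the predicted class flips. Robustness tests during validation can use the same idea to probe how small a perturbation is needed to change a decision.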

Dynamic Nature

Even if the use case does not change, many AI models continue to learn and evolve after deployment, for example through periodic re-training on new data. This dynamic nature can lead to unpredictable changes in behaviour over time that are, again, difficult to control.
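
One way to make such changes in behaviour visible is to compare the distribution of model scores observed in production with a reference distribution recorded at validation time. The sketch below uses the population stability index (PSI) for this purpose. This metric is our choice for illustration and is not prescribed by any particular framework; the function name, the number of bins, and the sample data are all assumptions.

```python
import math

def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a more recent sample of scores."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width) if width > 0 else 0
            # Values outside the reference range fall into the edge bins.
            idx = min(max(idx, 0), n_bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e = bin_shares(expected)
    a = bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical scores: a uniform reference sample and a shifted recent sample.
reference = [i / 100 for i in range(100)]
recent = [min(v + 0.3, 0.99) for v in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, recent)
print(f"unchanged: {psi_same:.3f}, shifted: {psi_shifted:.3f}")
```

An unchanged distribution yields a PSI of zero, and larger values indicate a larger shift. A commonly cited rule of thumb treats values above roughly 0.25 as a significant change, but any threshold used to trigger re-validation should be agreed as part of the control procedures.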

Leveraging MRM Controls and Procedures

Given these unique challenges, traditional IT security needs to be supplemented with AI-specific controls. The security challenges listed above are all related to the fact that these applications contain complex algorithms.

Model risk is the risk that an organisation takes when using mathematical models to make decisions. If these models do not function correctly, or if they are used outside the context for which they were developed, they might fail. Model risk management is the practice of mitigating this risk. This branch of risk management is well established, and also regulated, especially in the financial industry.

We therefore highlight a few aspects of MRM that can be used directly to control the security challenges mentioned earlier.

- Model Validation and Testing: AI applications require rigorous developmental testing and independent validation. Ideally, these two activities are carried out by different teams, as is common under the so-called three lines of defence principle. The tests that are executed by the developers and reviewed by the validators should include understanding the dataset used, mitigating biases, quantifying performance, identifying limitations, and ensuring robustness. This helps to harden the application against malicious attacks.

- Transparency and Explainability: There should be, at a minimum, an agreed method to audit the decisions of an AI application. Even if it is nearly impossible to understand the global behaviour of the algorithm, tools should be identified and made available to inspect a particular prediction, understand its driving factors, and build intuition about how sensitive the decision is to the inputs. This can be achieved with so-called local explainability techniques such as Shapley values.

- Model Monitoring and Maintenance: Ongoing monitoring is crucial to ensure continued accuracy and reliability, and to protect against threats like model drift or adversarial attacks. When KPIs change too much during monitoring, or when maintenance operations on the AI application have a material impact on the underlying algorithm, the control procedures should describe how the AI application is to be re-trained and/or re-validated.
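
To illustrate the local explainability technique mentioned above, the following sketch computes exact Shapley values for a single prediction. The scoring function `credit_score`, its inputs, and the all-zero baseline are hypothetical. Exact computation is only feasible for a handful of features because the cost grows exponentially with their number; practical tooling typically relies on approximations.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction; cost grows as 2**n features."""
    n = len(x)

    def value(subset):
        # Features in `subset` take their actual value, the rest the baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            # Weight of a coalition of size k in the Shapley formula.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for coalition in combinations(others, k):
                s = set(coalition)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical scoring function with an interaction between income and age.
def credit_score(z):
    income, debt, age = z
    return 2.0 * income - 1.5 * debt + 0.5 * income * age

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(credit_score, x, baseline)
print(dict(zip(["income", "debt", "age"], phi)))
```

The attributions sum to the difference between the prediction for `x` and the prediction for the baseline, so an auditor can see how much each input pushed this particular decision up or down. The result depends on the chosen baseline, which should therefore be documented as part of the agreed audit method.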

In addition, the overall governance of the application should be enhanced. This includes at least the following:

- Data Governance: Security controls must protect the large volumes of data used by AI, complying with relevant data protection and privacy laws. This is especially relevant because AI algorithms might memorise the data on which they have been trained.

- AI Ethics and Compliance: An AI ethics policy is required to ensure fairness, transparency, and accountability in AI decisions that impact individuals.

- AI-specific Incident Response Plan: Given the complexity of AI systems, a specific incident response plan for potential AI-related incidents is crucial.

- Training and Awareness Programs: Unique threats posed by AI require adequate training for IT staff, data scientists, and end-users.

- Third-Party Risk Management: The third-party risk management practices for traditional software vendors have to be extended when procuring AI services from third parties. Most importantly, it should be verified that the vendor has added enhancements similar to those described here to its regular secure software development practices. See e.g. section 8 of the ECB guide to internal models.

Conclusion

As AI continues to transform our software landscape, augmenting traditional IT security controls with AI-specific ones becomes not just important, but essential. An updated, comprehensive security policy that caters to the specific demands of AI applications is the key to unlocking AI’s potential in a secure and responsible fashion. This proactive approach protects data and systems, and it also preserves the trust of stakeholders and users in this rapidly evolving technology landscape.