The three lines of defence is the model that financial institutions, particularly (more established) banks, apply to deliver effective model risk management.

Before elaborating further on the three lines, it is important to understand that model risk management is not a sequential process. The path from the development of a model to its use in production requires multiple back-and-forth interactions between the different stakeholders involved in model risk management. These stakeholders make up the so-called three lines of defence and are divided according to their roles and responsibilities.

Through multiple iterations between these three lines of defence, an organization can assure the quality of models in production and reduce the risk of model failure.

By clarifying roles and responsibilities, the three lines of defence model enhances the understanding of governance and risk management. Information is centrally managed and shared, and the activities are coordinated to avoid duplication of efforts and gaps in coverage.

The main driver for the creation of this concept is the regulatory guidance SR 11-7, which states the following: “While there are several ways in which banks can assign the responsibilities associated with the roles in model risk management, it is important that reporting lines and incentives be clear, with potential conflicts of interest identified and addressed.”

Although SR 11-7 refers exclusively to banks, it is important to note that other financial institutions have adopted the guidance even though they may not have all three lines of defence. For instance, a model risk audit function is not always present, and some model risk responsibilities may be outsourced.

Organization of the three lines of defence

The first line of defence is composed of model developers, model owners, model users and model landscape architects. The second line of defence consists of the model validation and model governance teams, while the third line of defence consists of the internal auditors.

To better comprehend the three lines of defence, it is necessary to have a good understanding of a typical model lifecycle. This consists of seven stages: model proposal, model development, pre-validation, independent review, approval, implementation and validation, and reporting.

The First Line of Defence

Model developers, model owners, model users and model landscape architects form the first line of defence.

Model owners propose a model idea based on the business requirements. The model is identified and classified based on its expected materiality (e.g. business impact) and complexity.

Model developers are responsible for building the models and making sure that the models have been tested exhaustively before going into production.

Model users should provide feedback on how well the model serves its purpose. For instance, if a model user systematically overrides the output of the model, this is a signal that the model might not be fit for purpose and that its maintenance costs are not justified.
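The override signal described above can be tracked with a simple ratio. The following is a minimal sketch, assuming a hypothetical decision log in which each record stores the model output and the value the user finally applied. The `Decision` layout and the 30% alert threshold are illustrative assumptions, not values taken from this article or from any regulation.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """One model-supported decision (hypothetical record layout)."""
    model_output: float
    final_value: float  # value actually used after any manual override


def override_rate(decisions: list[Decision], tolerance: float = 1e-9) -> float:
    """Share of decisions where the user replaced the model output."""
    if not decisions:
        return 0.0
    overridden = sum(
        abs(d.final_value - d.model_output) > tolerance for d in decisions
    )
    return overridden / len(decisions)


# Illustrative threshold: an assumption, to be set by the institution's policy.
ALERT_THRESHOLD = 0.30

decision_log = [
    Decision(100.0, 100.0),
    Decision(102.5, 110.0),  # overridden by the user
    Decision(98.0, 98.0),
    Decision(101.0, 95.0),   # overridden by the user
]
rate = override_rate(decision_log)
needs_review = rate > ALERT_THRESHOLD
print(f"override rate: {rate:.0%}, flag for review: {needs_review}")
```

A persistently high rate does not prove that the model is wrong, but it gives the model owner a concrete trigger to discuss fitness for purpose with the users.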

Model landscape architect is a recent role in model risk management. Model landscape architects take a strategic perspective on the design and maintenance of the model landscape. They consider the requirements and perspectives of the different uses of each model type in order to design and maintain an optimal model landscape.

In addition, the first line of defence needs to generate model documentation, which provides a detailed description of the scope of application of the model, the model assumptions, the data sources, the tests and the process flow. For example, the scope of a Black-Scholes model might be the pricing and risk management of payoffs exercised at expiry on a single equity underlying, with the model approved for a settlement delay of up to 2 weeks and a final maturity of less than 5 years.
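A documented scope like the Black-Scholes example can also be encoded as a machine-readable check. The sketch below is one possible way to do so; the class and function names are hypothetical, and it assumes that "2 weeks" means 14 calendar days.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApprovedScope:
    """Approved usage limits taken from the model documentation."""
    max_settlement_delay_days: int  # inclusive upper bound
    max_maturity_years: float       # exclusive upper bound


@dataclass(frozen=True)
class Trade:
    settlement_delay_days: int
    maturity_years: float


def scope_breaches(trade: Trade, scope: ApprovedScope) -> list[str]:
    """Return a description of every approved limit the trade violates."""
    breaches = []
    if trade.settlement_delay_days > scope.max_settlement_delay_days:
        breaches.append("settlement delay exceeds approved limit")
    if trade.maturity_years >= scope.max_maturity_years:
        breaches.append("maturity outside approved range")
    return breaches


# Limits from the example in the text: up to 2 weeks, less than 5 years.
# Reading "2 weeks" as 14 calendar days is an assumption.
BLACK_SCHOLES_SCOPE = ApprovedScope(
    max_settlement_delay_days=14, max_maturity_years=5.0
)

in_scope = scope_breaches(Trade(2, 3.0), BLACK_SCHOLES_SCOPE)
out_of_scope = scope_breaches(Trade(2, 6.0), BLACK_SCHOLES_SCOPE)
print(in_scope)
print(out_of_scope)
```

Returning the list of breaches, rather than a single boolean, keeps the reason for any escalation visible to whoever reviews the trade.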

Moreover, the first line needs to ensure that the underlying theory of all mathematical models is valid and correct. Data used in the model development process needs to be gathered, understood and cleaned. The first line is also responsible for providing a model implementation once the theory has been described. This implementation has to be tested and shown to work correctly, in accordance with the underlying theory.

Once the model implementation is ready, the model needs to be described in a set of documents. These serve as a starting point for the team of validators. For instance, for a valuation model, there may be a specification document where the mathematical details and equations are described, an implementation document explaining how to obtain the parameters and which numerical methods are used, and a user guide covering product usage. Once the model has been validated successfully, it can be deployed into production.

Maintenance of models in production is typically the first line of defence’s responsibility. Model monitoring is also typically performed by the first line of defence, but the second line of defence is often closely involved, for instance by independently assessing the results. As a model may need to be improved because its performance has deteriorated or to address known limitations, it is critical to keep track of how model quality evolves over time.

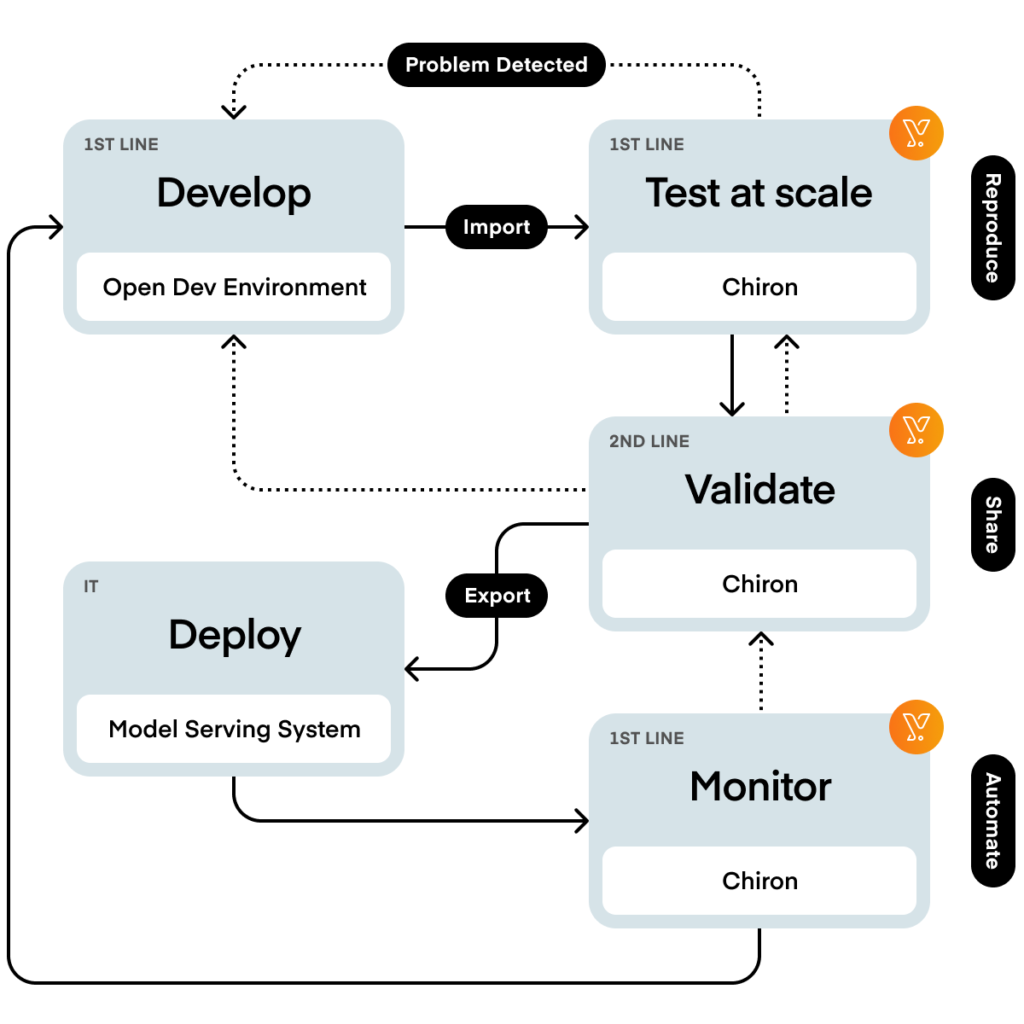

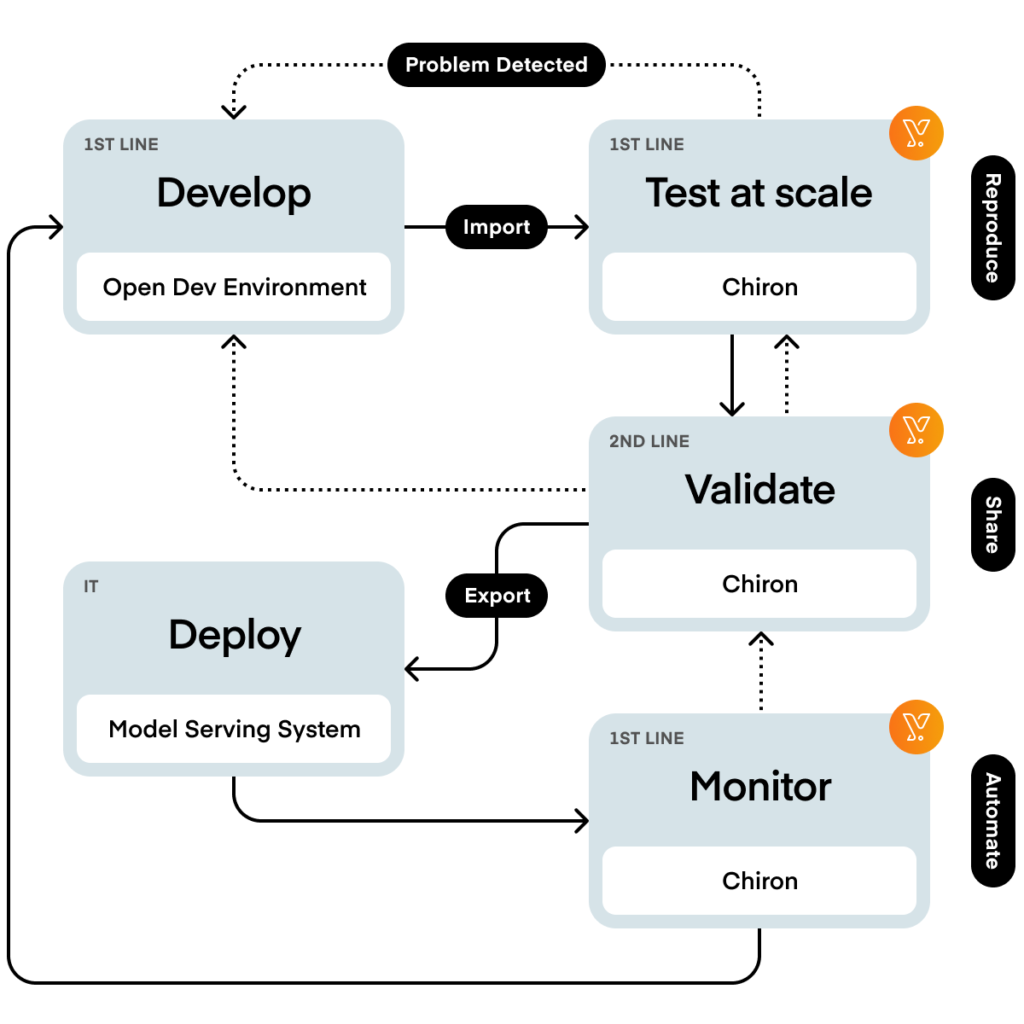

It is advisable to separate the production environment from the technology that monitors the models, which creates a healthy level of independence.

How can technology improve the first line of defence efficiency?

There are a few techniques to automate model development activities and contribute to an efficient process.

One of the key services of Chiron, Yields’ data science platform, is the automation of repetitive analyses. Examples of repetitive analyses are backtesting, data quality assessments, and monitoring the impact of stochastic interest rates via a benchmarking model such as the Black-Scholes Hull-White model. Through the automation of repetitive tasks, model risk managers can focus on tasks that require their subject-matter expertise.

Another option to automate repetitive tasks is the use of AutoML techniques to automatically create benchmarks. Moreover, AutoML can be combined with techniques such as autoencoders to automatically detect anomalies and assess data quality.

Through Chiron, model risk managers can create reproducible testing reports and monitor model quality evolution over time, thanks to the integration between Chiron and Jupyter notebooks. These provide useful tools to create interactive documentation which describes how the models are performing and their theoretical foundations.

A financial institution that uses Chiron makes a strategic commitment to own the validation process. Moreover, financial institutions can generate model validation documents compliant with regulatory frameworks such as SR 11-7 and PS 7/18.

Second Line of Defence

Model validation and model governance, the second line of defence, both have a reporting line to the head of model risk management.

Model validation independently reviews the documentation previously produced by the team of developers, and verifies and tests the higher-tier models. This is done to confirm that the first line is working according to the specifications. Moreover, this team usually produces a document with findings based on its review.

Model governance ensures that the models comply with model risk policies and procedures from the initiation phase, when the models are identified and added to the model inventory, until the end of the model lifecycle, also known as the retirement of the model. Model governance advises the first line of defence on how to comply with the model risk policies and procedures, gives one-off approvals for deals to be traded, and supervises the process of obtaining waivers for temporary model usage. It might also perform model validation or model review for lower-tier models.

In the Black-Scholes model example above, a one-off approval may be granted for a trade with a maturity of 6 years, which falls outside the approved limit of less than 5 years.

Reviewing is a critical component of the model validation process. Ideally, there is an interactive discussion between the first and the second lines of defence, which allows the second line of defence to critically assess the choices made by the first line of defence, such as the type of data used, the process defined for the model development, the model assumptions, the methodological choices, and the resulting limitations.

Furthermore, the model validation team needs to be independent. This means it should have separate reporting lines as well as sufficient knowledge, skills, and expertise in building and validating models. Likewise, the second line of defence should have explicit authority to challenge the first line of defence, for instance when there is a need to stop models from going into production.

Once models are running in production, periodic reviews take place at a frequency commensurate with the model tier: higher-tier models are reviewed more frequently.
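A tier-based review schedule can be expressed as a small lookup. The sketch below is illustrative only: the review intervals are hypothetical, and it assumes that tier 1 denotes the highest tier. Each institution defines its own tiers and frequencies in its model risk policy.

```python
from datetime import date

# Hypothetical policy table: months between periodic reviews per model tier.
# Tier 1 is assumed to be the highest tier and is therefore reviewed most often.
REVIEW_INTERVAL_MONTHS = {1: 12, 2: 24, 3: 36}


def next_review(last_review: date, tier: int) -> date:
    """Date of the next periodic review for a model of the given tier."""
    months_ahead = last_review.month - 1 + REVIEW_INTERVAL_MONTHS[tier]
    year = last_review.year + months_ahead // 12
    month = months_ahead % 12 + 1
    # Clamp the day to 28 so the result is a valid date in every month.
    return date(year, month, min(last_review.day, 28))


def is_review_due(last_review: date, tier: int, today: date) -> bool:
    """True once the next scheduled review date has been reached."""
    return today >= next_review(last_review, tier)


last = date(2023, 3, 15)
today = date(2024, 6, 1)
for tier in sorted(REVIEW_INTERVAL_MONTHS):
    print(tier, next_review(last, tier), is_review_due(last, tier, today))
```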

Model Validation Framework

Model validation is usually organised through a model validation framework. According to SR 11-7, there are three main elements of this framework.

The first element is the assessment of conceptual soundness. The model should be specified so that it captures the main features that characterize the product in scope, for example the implied volatility skew of equity options.

The second main element is ongoing monitoring. During the model lifetime, model validators have to check whether any known model limitations are being violated. In addition, they need to review the results of previous validations and the tests performed during model development. In the Black-Scholes model example above, the Black-Scholes Hull-White benchmark model could be used to assess the impact of stochastic interest rates.

A model validator should check that the code has not been modified and that the system integration still works as expected. This is fundamental to the integrity of the model.

Lastly, as part of the ongoing monitoring, the first and second lines of defence should check for overrides. Manual overrides suggest that the model is not behaving as expected, so tracking them allows both lines of defence to flag issues.

The third element of the model validation framework is outcomes analysis. Here, among other things, the model validator performs sensitivity analysis to assess the stability of the model output with respect to changes in the inputs, for instance the change in the call or put price when the input implied volatility surface changes.
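The sensitivity analysis described above can be illustrated with a bump-and-reprice test. The sketch below is a simplified example rather than a full validation test: it uses the standard Black-Scholes formula for a European call without dividends, and it replaces the implied volatility surface with a single flat volatility that is shifted up and down by one volatility point. The function names and the market parameters are illustrative assumptions.

```python
from math import erf, exp, log, sqrt


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))


def bs_call(spot: float, strike: float, rate: float, vol: float,
            maturity: float) -> float:
    """Black-Scholes price of a European call (no dividends)."""
    d1 = (log(spot / strike) + (rate + 0.5 * vol**2) * maturity) / (
        vol * sqrt(maturity)
    )
    d2 = d1 - vol * sqrt(maturity)
    return spot * norm_cdf(d1) - strike * exp(-rate * maturity) * norm_cdf(d2)


def vol_sensitivity(spot: float, strike: float, rate: float, vol: float,
                    maturity: float, bump: float = 0.01) -> float:
    """Central finite difference of the call price with respect to volatility."""
    up = bs_call(spot, strike, rate, vol + bump, maturity)
    down = bs_call(spot, strike, rate, vol - bump, maturity)
    return (up - down) / (2.0 * bump)


# Illustrative inputs: at-the-money call, 1 year, 2% rate, 20% flat volatility.
base_price = bs_call(100.0, 100.0, 0.02, 0.20, 1.0)
vega = vol_sensitivity(100.0, 100.0, 0.02, 0.20, 1.0)
print(f"price: {base_price:.4f}, sensitivity to volatility: {vega:.4f}")
```

In practice a validator would repeat the bump across strikes and maturities of the surface and compare the resulting sensitivities with expectations, such as the analytical vega where one is available.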

As previously mentioned, with Chiron, model risk managers can automate repetitive analyses, such as backtesting, benchmarking or data quality assessments. This reduces the time to market of bringing new model risk management technology into production.

Chiron, our data science platform, speeds up the model lifecycle process allowing model risk managers to deploy new models 10x faster. Furthermore, it increases the efficiency of model risk management through automation. Thanks to this, model risk managers can register cost reductions of up to 80%.

Third Line of Defence

Internal auditors represent the third line of defence. Their responsibility is to confirm whether the process between the first and second line of defence works efficiently and to capture model deficiencies by checking if model risk policies and procedures have been executed as expected and in compliance with regulatory guidance.

Usually, auditors carry out a model risk audit by performing a deep dive on a particular line of defence or type of model. These analyses mostly focus on the high-tier models and/or on those used for regulatory exercises such as CCAR or ICAAP.

Capturing model deficiencies is typically a very time-constrained task. One of the key challenges is to identify where the process is prone to inefficiencies, for instance by spotting an atypical model validation document.

How can machine learning improve the third line of defence efficiency?

One way to use machine learning to automate the identification of inefficiencies in the process starts with a repository of all validation reports. Usually, each financial institution has a model risk management framework that governs how validation reports are generated. This means that the structure of most validation reports should be similar.

This similarity makes it possible to build a feature extraction algorithm that identifies the different sections and properties of a validation document, a process that can be fully automated.

The result of the feature extraction algorithm is a vector for each report. Auditors can train an outlier detection algorithm on these vectors to identify atypical points in the dataset. Such outliers represent validation reports that are very different from the ones previously created, for instance because they have missing sections, are longer than the other validation reports, or have other problematic features.
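The two steps above, feature extraction followed by outlier detection, can be sketched as follows. This is a deliberately simple stand-in and not the method used by any specific tool: the section names are hypothetical, the features are limited to the number of missing sections and the word count, and a robust z-score based on the median absolute deviation replaces a trained outlier detection model. An institution could substitute a learned detector such as an isolation forest.

```python
from statistics import median

# Hypothetical section names; a real framework defines its own template.
EXPECTED_SECTIONS = ["scope", "data", "methodology", "testing", "findings"]


def extract_features(report: str) -> list[float]:
    """Map a report to [number of missing sections, word count].

    Section detection is a crude case-insensitive substring search.
    """
    text = report.lower()
    missing = sum(section not in text for section in EXPECTED_SECTIONS)
    return [float(missing), float(len(report.split()))]


def robust_scores(values: list[float]) -> list[float]:
    """Distance from the median, scaled by the median absolute deviation."""
    centre = median(values)
    # Fall back to 1.0 when the deviation is zero (most values identical).
    mad = median(abs(v - centre) for v in values) or 1.0
    return [abs(v - centre) / mad for v in values]


def find_outliers(reports: list[str], threshold: float = 3.5) -> list[int]:
    """Indices of reports with at least one feature far from the median."""
    features = [extract_features(r) for r in reports]
    flagged = set()
    for column in zip(*features):
        for index, score in enumerate(robust_scores(list(column))):
            if score > threshold:
                flagged.add(index)
    return sorted(flagged)


# Toy repository: five similar reports and one with missing sections
# and an unusual length.
template = "Scope Data Methodology Testing Findings"
reports = [template + " word" * n for n in (100, 105, 110, 95, 102)]
atypical = "Scope Data Findings" + " word" * 400
reports.append(atypical)

print(find_outliers(reports))
```

The flagged indices only tell the auditor where to look first; whether an atypical report points to a real deficiency still requires review.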

Conclusion

The three lines of defence provide the structure and governance needed to address the increasing dependency of financial institutions on models to make informed decisions. The delimitation of three lines of defence in model risk management helps ensure that high-quality models are put in production. Moreover, it is a strong foundation for financial institutions to meet increasingly stringent regulatory expectations, and it reduces the risk of model failure.

| The Three Lines of Defence | Roles |
| --- | --- |
| First Line of Defence (Model Developers, Model Owners, Model Users and Model Landscape Architects) | Model Development; Data Cleaning; Model Documentation; Model Implementation and Testing |
| First and Second Line of Defence | Model Monitoring |
| Second Line of Defence (Model Validation and Model Governance) | Control and Support the First Line of Defence; Model Review; Model Validation; (Independent) Model Testing |
| Third Line of Defence (Internal Audit) | Confirm the efficient process between the First and Second Line of Defence in accordance with policies and procedures |

Effective implementation of the three lines of defence requires coordination and intense collaboration between the different stakeholders involved. Gaps in coverage or duplication of efforts should be avoided.

Through the centralization of all data, analytics and reports, Chiron enables efficient and intense collaboration between the different lines of defence. With Chiron, Yields’ award-winning data science platform, you can streamline your model risk management, create reproducible model documentation, and automate testing.