We would like to start this post by answering the question: “What is bias in machine learning?”

“Bias” is the “inclination or prejudice for or against one person or group, especially in a way considered to be unfair”. In the context of algorithms, two aspects in particular require our attention:

- Bias in algorithms is often driven by the data on which the algorithm is trained

- Addressing this problem in machine learning requires quantifying what it means for an outcome to be unfair

Let us set the stage by introducing two examples:

In a first example, assume that we build an algorithm to predict the probability of default. One client segment contains 1000 clients, of whom only 10 defaulted. A very naive algorithm could predict that the probability of default for this segment is exactly 0%. This prediction is correct for 99% of the clients (990 out of 1000). However, because the data is highly imbalanced, the algorithm systematically underestimates the risk and is therefore biased.
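To make the arithmetic concrete, here is a minimal sketch in Python that rebuilds the hypothetical segment (1000 clients, 10 defaults) and scores the naive always-0% predictor. The variable names and the 0.5 cut-off used to turn a probability into a class are illustrative assumptions.

```python
# Hypothetical segment from the example: 1000 clients, 10 of whom defaulted.
labels = [1] * 10 + [0] * 990  # 1 = default, 0 = no default

# The naive model predicts a 0% probability of default for every client.
predicted_pd = [0.0] * len(labels)
# Assumed cut-off of 0.5 to turn a probability into a default / no-default class.
predicted_class = [1 if p >= 0.5 else 0 for p in predicted_pd]

accuracy = sum(p == y for p, y in zip(predicted_class, labels)) / len(labels)
observed_default_rate = sum(labels) / len(labels)
average_predicted_pd = sum(predicted_pd) / len(predicted_pd)

# Recall on defaults: the fraction of actual defaulters the model flags.
recall = sum(p == 1 and y == 1 for p, y in zip(predicted_class, labels)) / sum(labels)

print(f"accuracy:              {accuracy:.1%}")
print(f"observed default rate: {observed_default_rate:.1%}")
print(f"average predicted PD:  {average_predicted_pd:.1%}")
print(f"defaults detected:     {recall:.1%}")
```

Accuracy looks excellent (99%), yet the average predicted probability of default is 0% against an observed default rate of 1%, and the model detects none of the defaulters. This is why accuracy alone is a poor yardstick on imbalanced data.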

Building on the first example, suppose that our institution starts catering to a new client segment (e.g. people of a different nationality). In the early phases, we do not have much data on this new segment. Suppose further that 5% of the clients in this new segment have defaulted so far. A naive regression model might conclude from this data that all people of that nationality have a much higher default rate, which may be an equally bold and wrong extrapolation.
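One way to see why a 5% default rate in a young segment is a shaky basis for extrapolation is to put an uncertainty interval around it. The sketch below uses the Wilson score interval, a standard interval for a binomial proportion; the counts (1 default among 20 clients) are a hypothetical assumption, since the example only gives the 5% rate.

```python
import math


def wilson_interval(defaults: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95% coverage)."""
    p_hat = defaults / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Hypothetical counts: 1 default among the first 20 clients of the new segment (5%).
low, high = wilson_interval(defaults=1, n=20)
print(f"observed rate: {1 / 20:.1%}, 95% interval: [{low:.1%}, {high:.1%}]")
```

With these assumed counts, the interval runs from just under 1% to roughly 24%, so the data is also consistent with a default rate similar to the 1% of the established segment. A model that treats the 5% point estimate as a property of the whole nationality ignores this uncertainty.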

This last example is more intricate because (1) it is not always straightforward to identify causality correctly or to choose the most appropriate way to extrapolate, and (2) it is hard to quantify bias formally in a way that is acceptable to all parties.

Because of these subtleties, examples of bias in machine learning algorithms are plentiful.

An often-cited example comes from Amazon, which had created an AI platform to recruit new developers. The algorithm developed a gender bias because, historically, most developers were men, so the training dataset was highly imbalanced. As a consequence, the AI incorrectly inferred that being a woman made a candidate less suited to a developer job.

Another, more recent example involved the Apple Card, whose credit limits appeared to show gender bias after married couples reported that the husband’s limit was far higher than his wife’s; in one widely reported case, it was 20 times higher.

Common types of bias in machine learning algorithms

Bias in machine learning can be categorized in many different ways. Here, we follow the categorization used on Wikipedia:

- Pre-existing bias in algorithms is a consequence of underlying social and institutional ideologies, which can influence the designers or programmers of the software; in this sense, it is human bias carried over into machine learning. These preconceptions can be explicit and conscious, or implicit and unconscious. In general, bias can enter an algorithm both through the modeling approach and through the use of poor data or data from a biased source.

- Technical bias arises from limitations of a program, computational power, its design, or other constraints on the system. For example, technical bias can appear if we oversimplify a model because of constraints such as response times in the application.

- Emergent bias is the result of using and relying on algorithms in new or unexpected contexts. A typical example is a model applied to data that is not representative of the data on which it was trained.
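Emergent bias is often first noticed as a shift between the population a model was trained on and the population it is applied to. As an illustration (our choice of technique, not one prescribed by the categorization above), the sketch below computes the population stability index (PSI), a drift measure commonly used in credit risk, over hypothetical segment shares.

```python
import math


def population_stability_index(
    expected: dict[str, float], actual: dict[str, float], eps: float = 1e-6
) -> float:
    """PSI between two categorical distributions given as {category: share}."""
    psi = 0.0
    for category in expected.keys() | actual.keys():
        # Clip empty categories to eps to avoid log(0) and division by zero.
        e = max(expected.get(category, 0.0), eps)
        a = max(actual.get(category, 0.0), eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical client mix: the model was trained mostly on segment A,
# but in production segment B makes up a much larger share.
training_mix = {"segment A": 0.9, "segment B": 0.1}
production_mix = {"segment A": 0.6, "segment B": 0.4}

psi = population_stability_index(training_mix, production_mix)
print(f"PSI = {psi:.2f}")
```

With these assumed shares the PSI is about 0.54. A commonly quoted rule of thumb treats values above 0.25 as a significant shift, which would be a signal to check whether the model still behaves as intended for the segment that has grown.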

How to measure bias in machine learning algorithms?

When building models, we need precise definitions of fairness in order to fix bias systematically. To illustrate this, we will analyse a concrete example from Belgium, the home country of Yields.io. Belgium is a small country, but it has a rather intricate institutional system, largely because it has three official languages: Dutch, French and German. To simplify the example, we focus on the two largest communities, which speak Dutch and French. The northern part of the country is primarily Dutch-speaking and the southern part primarily French-speaking.

Let’s now assume that we are developing credit decision models for Belgium. Given how sensitive the language question is in Belgium, we want to make sure that the results of the credit decision model “do not depend on the language that is spoken by the person who is applying for the credit”. In other words, we treat language as a protected attribute.

First, we could rely on “unawareness”, which means removing the language attribute from the dataset before training, in the hope that the model will then not become sensitive to language. However, other features can be correlated with the protected attribute (so-called proxy variables), so it may still be possible to reverse-engineer its value.

In the case of Belgium, you can predict a person’s language fairly accurately simply by looking up their address and checking whether they live in the northern or southern half of the country. This example shows that unawareness is typically not a good safeguard against bias.
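The proxy problem is easy to reproduce on synthetic data. The sketch below assumes, purely for illustration, that 55% of applicants live in the north and that each applicant speaks the majority language of their region 95% of the time; it then drops the language column and recovers language from region alone.

```python
import random

rng = random.Random(42)  # fixed seed so the synthetic data is reproducible

# Synthetic applicants (assumption): region is known from the address, and
# language matches the region's majority language 95% of the time.
applicants = []
for _ in range(10_000):
    region = "north" if rng.random() < 0.55 else "south"
    majority = "Dutch" if region == "north" else "French"
    minority = "French" if region == "north" else "Dutch"
    language = majority if rng.random() < 0.95 else minority
    applicants.append((region, language))


# "Unaware" setting: the model never sees language, only region.
# A trivial rule on the proxy recovers the protected attribute anyway.
def guess_language(region: str) -> str:
    return "Dutch" if region == "north" else "French"


accuracy = sum(guess_language(r) == lang for r, lang in applicants) / len(applicants)
print(f"language recovered from region: {accuracy:.1%} of applicants")
```

On this synthetic data the region rule recovers the language of roughly 95% of applicants, so any model that uses the address can implicitly use language too, even though the language column was removed.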

A second approach is “demographic parity”. Here, you require the distribution of the model’s output to be the same regardless of the value of the protected attribute. In our credit risk example, this would mean that the distribution of credit scores, and hence the approval rate, is the same for Dutch and French speakers.
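For a binary credit decision, demographic parity is often checked by comparing approval rates across groups. The sketch below computes that difference on ten hypothetical decisions; the function name `approval_rate` and the data are illustrative assumptions.

```python
def approval_rate(decisions, groups, group):
    """Share of applicants in `group` whose credit was approved (decision == 1)."""
    selected = [d for d, g in zip(decisions, groups) if g == group]
    return sum(selected) / len(selected)


# Hypothetical decisions for ten applicants, five per language group.
decisions = [1, 1, 0, 1, 0,   # Dutch speakers
             1, 0, 0, 1, 0]   # French speakers
language = ["Dutch"] * 5 + ["French"] * 5

rate_dutch = approval_rate(decisions, language, "Dutch")
rate_french = approval_rate(decisions, language, "French")

# Demographic parity holds when this difference is (close to) zero.
parity_gap = abs(rate_dutch - rate_french)
print(f"approval rate Dutch: {rate_dutch:.0%}, French: {rate_french:.0%}, gap: {parity_gap:.2f}")
```

Here 60% of Dutch speakers and 40% of French speakers are approved, a gap of 0.20, so this hypothetical model would violate demographic parity.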

In some cases, demographic parity is not considered fair either. If, for example, the northern part of Belgium had a higher average probability of default than the southern part because Dutch speakers were less risk averse, this approach would mean that the French-speaking community pays more to compensate for the risk appetite of the North. This, too, is often considered unfair.

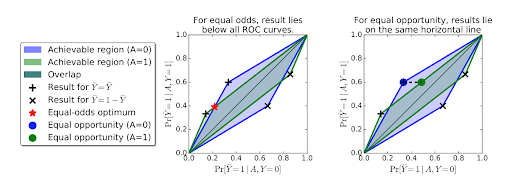

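This is why “equalized odds” is very often chosen instead. “Equalized odds is a statistical notion of fairness in machine learning that ensures that classification algorithms do not discriminate against protected groups.” Informally stated, equalized odds requires the forecasting error to be independent of the protected attribute: among clients who default, and separately among clients who do not, the model should behave the same way for Dutch and French speakers. For a binary classifier, this means equal true positive rates and equal false positive rates across the groups.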
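For a binary classifier, equalized odds can be checked by comparing true positive rates (TPR) and false positive rates (FPR) across groups. The sketch below reports the larger of those two differences as a single gap; the helper names and the 16 labelled predictions are hypothetical.

```python
def tpr_fpr(y_true, y_pred, groups, group):
    """True positive rate and false positive rate of the predictions within one group."""
    pos = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    neg = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 0]
    return sum(pos) / len(pos), sum(neg) / len(neg)


def equalized_odds_gap(y_true, y_pred, groups, group_a, group_b):
    """Largest between-group difference in TPR or FPR; 0 means equalized odds holds."""
    tpr_a, fpr_a = tpr_fpr(y_true, y_pred, groups, group_a)
    tpr_b, fpr_b = tpr_fpr(y_true, y_pred, groups, group_b)
    return max(abs(tpr_a - tpr_b), abs(fpr_a - fpr_b))


# Hypothetical data: y_true = 1 means the client defaulted,
# y_pred = 1 means the model flagged the client as likely to default.
language = ["Dutch"] * 8 + ["French"] * 8
y_true = [1, 1, 1, 1, 0, 0, 0, 0,  1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0,  1, 1, 0, 0, 1, 1, 0, 0]

print(tpr_fpr(y_true, y_pred, language, "Dutch"))   # (0.75, 0.25)
print(tpr_fpr(y_true, y_pred, language, "French"))  # (0.5, 0.5)
print(equalized_odds_gap(y_true, y_pred, language, "Dutch", "French"))  # 0.25
```

The model catches 75% of Dutch-speaking defaulters but only 50% of French-speaking ones, and wrongly flags 25% versus 50% of non-defaulters, so it does not satisfy equalized odds.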

How to handle bias in machine learning algorithms using equalized odds in practice?

We can now define a simple procedure to address bias in ML models:

- Determine the protected attributes of the dataset

- Train the model on the full dataset

- Measure the bias using the mathematical definition of “equalized odds”

- Correct the model output algorithmically, if needed, after the model has been trained

This systematic approach allows you to quantify the degree of bias and, where needed, to correct it.
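The four steps can be sketched end to end in Python. This is a minimal sketch under several assumptions: language is the protected attribute with two groups, the model’s risk scores are already given (standing in for the training step), and the correction is a simple deterministic search for group-specific decision thresholds. The well-known post-processing method of Hardt, Price and Srebro (2016) instead randomizes between thresholds, which allows equalized odds to be met exactly; the grid search here is a simplified stand-in, and all data is hypothetical.

```python
from itertools import product

# Step 1 (assumption): language is the protected attribute, with two groups.
# Step 2 (assumption): these scores come from a model already trained on the
# full dataset; labels are 1 = default, 0 = no default. All data is hypothetical.
groups = ["Dutch"] * 8 + ["French"] * 8
y_true = [1, 1, 1, 1, 0, 0, 0, 0] * 2
scores = [0.90, 0.70, 0.55, 0.40, 0.45, 0.30, 0.20, 0.10,   # Dutch
          0.95, 0.85, 0.75, 0.60, 0.70, 0.55, 0.40, 0.30]   # French


def evaluate(thresholds):
    """Return (equalized-odds gap, accuracy) when each group has its own threshold."""
    rates, correct = [], 0
    for group, t in thresholds.items():
        idx = [i for i, g in enumerate(groups) if g == group]
        pred = {i: int(scores[i] >= t) for i in idx}
        pos = [pred[i] for i in idx if y_true[i] == 1]
        neg = [pred[i] for i in idx if y_true[i] == 0]
        rates.append((sum(pos) / len(pos), sum(neg) / len(neg)))  # (TPR, FPR)
        correct += sum(pred[i] == y_true[i] for i in idx)
    (tpr_a, fpr_a), (tpr_b, fpr_b) = rates
    return max(abs(tpr_a - tpr_b), abs(fpr_a - fpr_b)), correct / len(groups)


def objective(thresholds):
    gap, acc = evaluate(thresholds)
    return gap, -acc  # minimise the gap first, then prefer higher accuracy


# Step 3: measure bias with a single shared threshold of 0.5.
gap_before, acc_before = evaluate({"Dutch": 0.5, "French": 0.5})

# Step 4: post-process by searching group-specific thresholds. Breaking ties
# by accuracy avoids trivial zero-gap choices such as flagging nobody when a
# more accurate zero-gap option exists.
candidates = sorted(set(scores) | {0.5, 1.01})
best = min(
    (dict(zip(["Dutch", "French"], pair)) for pair in product(candidates, repeat=2)),
    key=objective,
)
gap_after, acc_after = evaluate(best)

print(f"before: gap={gap_before:.2f}, accuracy={acc_before:.2%}")
print(f"after:  gap={gap_after:.2f}, accuracy={acc_after:.2%}, thresholds={best}")
```

On this data the shared 0.5 threshold gives a gap of 0.50, while group-specific thresholds bring it to 0 at an accuracy of 87.5%. In practice, the thresholds would be chosen on a validation set rather than on the data used to report results.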