At Yields, our mission is to “empower organizations to trust their algorithms and lead them to responsible AI-based decision making”.

Model risk management of AI is an important global topic. As financial, recruiting, healthcare, justice and governmental institutions introduce AI solutions, regulators are creating new standards for the model risk management of AI applications. The Assessment List for Trustworthy Artificial Intelligence (ALTAI) is proof of that.

Trustworthy AI with ALTAI

On July 17, 2020, ALTAI was presented by the European Commission (EC). It builds on the Ethics Guidelines for Trustworthy AI, which introduced the concept of a Trustworthy AI framework based on qualitative and quantitative assessments of seven key requirements:

1. human agency and oversight

2. technical robustness and safety

3. privacy and data governance

4. transparency

5. diversity, non-discrimination and fairness

6. environmental and societal well-being

7. accountability

The ALTAI is grounded in the protection of people’s fundamental rights. To efficiently manage the model risk of machine learning/AI, companies have to modify their existing processes and move towards technology-assisted validation.

Indeed, AI applications typically rely on much larger datasets, which require powerful tools to assess, for instance, data quality and data stability. Besides this, machine learning models pose specific challenges such as bias, limited explainability and security threats (for example, adversarial attacks), which can only be addressed with additional algorithmic techniques and scalable computing infrastructure.

ALTAI does not offer specific techniques to measure the various issues with AI/ML models. However, in order to manage the risk related to such algorithms, we need a reproducible and objective way to measure the risks involved.

In this article, we will therefore focus on how to turn certain aspects of ALTAI into quantitative assessments. Such measures are already implemented in Chiron, Yields’ award-winning enterprise platform for model risk management.

Requirement 1: Human Agency and Oversight

In order to allow for efficient human oversight, technology is needed to monitor the behaviour of the algorithms. Whenever atypical data or performance is detected, humans need to be alerted so that they can review it.
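As a minimal sketch of such monitoring, the Python example below compares the distribution of a model input or score in production with a reference sample using the Population Stability Index (PSI), a common data-stability metric, and flags the model for human review when the drift is large. The choice of PSI, the 10 quantile bins and the 0.25 alert threshold (a widely used rule of thumb) are our assumptions for illustration, not a description of how Chiron works.

```python
import numpy as np

def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a production sample of one variable.
    Bins are defined by the quantiles of the reference sample."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior bin edges at the reference quantiles; values below the first
    # edge or above the last edge fall into the two outer bins.
    edges = np.quantile(reference, np.linspace(0.0, 1.0, n_bins + 1))[1:-1]
    ref_counts = np.bincount(np.digitize(reference, edges), minlength=n_bins)
    cur_counts = np.bincount(np.digitize(current, edges), minlength=n_bins)
    # Convert counts to shares; clip to avoid log(0) for empty bins.
    ref_share = np.clip(ref_counts / len(reference), eps, None)
    cur_share = np.clip(cur_counts / len(current), eps, None)
    return float(np.sum((cur_share - ref_share) * np.log(cur_share / ref_share)))

def needs_review(reference, current, threshold=0.25):
    """Return the PSI and whether it exceeds the (assumed) alert threshold."""
    psi = population_stability_index(reference, current)
    return psi, psi > threshold

rng = np.random.default_rng(0)
reference = rng.normal(0.0, 1.0, 5000)   # e.g. model scores at validation time
production = rng.normal(1.0, 1.0, 5000)  # hypothetical shifted production scores
psi, alert = needs_review(reference, production)
print(f"PSI = {psi:.3f}, human review needed: {alert}")
```

In a production setting, a check like this would run on a schedule for every monitored variable, and only the alerts would reach a human reviewer.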

In that respect, the emerging field of MLOps is directly relevant. MLOps, a blend of machine learning and operations, is a practice for collaboration and communication between data scientists and operations teams that helps manage the production ML lifecycle.

Chiron drives efficiency, leading to cost reductions of up to 70%. Efficiency is realised in two dimensions. First, clients can apply the same validation and testing approach consistently across all models of a given type. Second, repetitive analyses (such as monitoring tasks) can be run continuously. This provides a scalable approach to monitoring ML applications such as real-time fraud detection, algorithmic trading or intraday derivatives pricing, to name a few.

Requirement 2: Technical robustness and safety

A fundamental requirement for accomplishing trustworthy AI systems is their dependability and resilience. Technical robustness demands that AI systems are developed with a precautionary approach to risks. Moreover, it requires that AI systems behave reliably and as intended while minimizing unintentional and unexpected harm, as well as preventing it where possible.

The questions in this section address four main issues: resilience to attack and security; general safety; accuracy; and reliability, fall-back plans and reproducibility.

One of enterprises’ biggest concerns is: “How exposed is the AI system to cyber-attacks?”

Data poisoning

Data poisoning is one of the attacks an adversarial actor can mount against an AI system. It happens when the attacker is able to inject bad data into the AI model’s training set, so that the AI system learns something it should not be learning.

To protect against data poisoning, you should check how robust your model’s performance is to changes in data quality. Within Chiron, you can monitor model quality in real time and integrate these services with your enterprise architecture.

Another option against data poisoning is to use machine learning techniques to generate data with various degrees of pollution, in order to measure how sensitive the model is to poisoning and to estimate the amount of poisoning, if any is present.
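The sketch below illustrates the idea of generating training data with increasing degrees of pollution and tracking how performance on a clean hold-out set degrades. It is a toy set-up under our own assumptions: synthetic one-dimensional Gaussian data, a nearest-centroid classifier, and a targeted label-flipping attack in which a fraction of class-1 training labels is switched to 0. In practice you would substitute your own model, data and threat model.

```python
import numpy as np

def make_data(rng, n_per_class, sigma=0.5):
    """Two 1-D Gaussian classes centred at -1 (label 0) and +1 (label 1)."""
    x = np.concatenate([rng.normal(-1.0, sigma, n_per_class),
                        rng.normal(+1.0, sigma, n_per_class)])
    y = np.concatenate([np.zeros(n_per_class, int), np.ones(n_per_class, int)])
    return x, y

def poison_labels(rng, y, rate):
    """Targeted label flipping: relabel a fraction `rate` of class-1 samples as 0."""
    y = y.copy()
    ones = np.flatnonzero(y == 1)
    flipped = rng.choice(ones, size=int(rate * len(ones)), replace=False)
    y[flipped] = 0
    return y

def fit_nearest_centroid(x, y):
    """'Training' is just computing the mean of each class."""
    return np.array([x[y == 0].mean(), x[y == 1].mean()])

def predict(centroids, x):
    """Assign each sample to the class with the closest centroid."""
    return np.argmin(np.abs(x[:, None] - centroids[None, :]), axis=1)

def poisoning_sweep(rates, seed=0):
    """Clean hold-out accuracy for each pollution rate of the training labels."""
    rng = np.random.default_rng(seed)
    x_train, y_train = make_data(rng, 1000)
    x_test, y_test = make_data(rng, 2000)  # clean hold-out set
    results = {}
    for rate in rates:
        centroids = fit_nearest_centroid(x_train, poison_labels(rng, y_train, rate))
        results[rate] = float(np.mean(predict(centroids, x_test) == y_test))
    return results

for rate, acc in poisoning_sweep([0.0, 0.2, 0.4, 0.6]).items():
    print(f"pollution rate {rate:.1f}: clean test accuracy {acc:.3f}")
```

A steep drop in accuracy at low pollution rates suggests a model that an attacker could influence cheaply.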

Robustness against adversarial examples

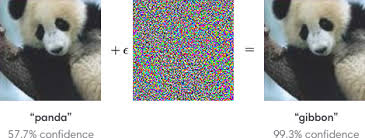

Adversarial examples are inputs that have been crafted to maximally confuse the model. For instance, in the image above, the human eye sees a panda in both pictures and cannot tell them apart. However, the model confidently classifies the second image, which was generated algorithmically, as a gibbon. This difference arises because a small, carefully crafted adversarial perturbation was added to the first image (on the left).

Robustness of an AI system incorporates technical robustness as well as its robustness from a social perspective.

By generating such samples with machine learning techniques, you can measure the resulting performance loss and compare it with that of other models. Typically, simple models (for instance, largely linear models with few features) are more robust against adversarial examples.
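One well-known way to generate adversarial samples is the Fast Gradient Sign Method (FGSM), which nudges every input feature by a small amount eps in the direction that increases the model’s loss. The sketch below applies it to a logistic-regression model with hypothetical, already-trained weights on synthetic data. For this model the gradient of the log-loss with respect to the input has the closed form (p - y) * w, so no automatic differentiation library is needed.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_linear(x, y, w, b, eps):
    """FGSM for logistic regression: move each input by eps in the sign
    direction of the loss gradient d(log-loss)/dx = (p - y) * w."""
    p = sigmoid(x @ w + b)
    grad = (p - y)[:, None] * w[None, :]
    return x + eps * np.sign(grad)

def accuracy(x, y, w, b):
    return float(np.mean((sigmoid(x @ w + b) >= 0.5) == y))

rng = np.random.default_rng(0)
n, d = 2000, 20
w = rng.normal(size=d)             # hypothetical, already-trained weights
b = 0.0
x = rng.normal(size=(n, d))
y = (x @ w + b >= 0).astype(float)  # labels the model gets right on clean data

for eps in [0.0, 0.05, 0.1, 0.2]:
    x_adv = fgsm_linear(x, y, w, b, eps)
    print(f"eps={eps:.2f}: accuracy on adversarial inputs {accuracy(x_adv, y, w, b):.3f}")
```

For a linear model, FGSM shifts each logit by exactly eps times the L1 norm of the weights, a quantity that grows with the number of features; this is one way to see why models with fewer features tend to be harder to fool with this kind of perturbation.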

Requirement 3: Privacy and data governance

Privacy is a fundamental right affected by AI systems, linked to the principle of prevention of harm. Protecting it requires adequate data governance.

Within Chiron, you are able to govern your data by using a distributed data lake that allows users to centralize, version and clean all data needed for the model validation phase. Moreover, you can create reproducible model documentation as well as validation reports.

Data governance covers the quality and integrity of the data used in the models, its relevance to the domain in which the AI systems will be deployed, its access protocols, and the ability to process the data while protecting privacy.

The two questions asked regarding privacy in the assessment list are:

– “Did you consider the impact of the AI system on the right to privacy, the right to physical, mental and/or moral integrity and the right to data protection?”

– “Depending on the use case, did you establish mechanisms that allow flagging issues related to privacy concerning the AI system?”

Measurement of disclosure risk

To ensure that the data in your AI systems respects the right to privacy, we suggest measuring the disclosure risk.

According to a definition published on Springer Link, disclosure risk can be defined “as the risk that a user or an intruder can use the protected dataset”. It can be measured, for instance, as the fraction of uniquely identifiable samples given the values of a set of attributes.
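A minimal sketch of this uniqueness measure, assuming the data is a list of records and that you choose the quasi-identifying attributes yourself; the records and attribute names below are hypothetical.

```python
from collections import Counter

def uniqueness_risk(records, quasi_identifiers):
    """Fraction of records whose combination of quasi-identifier values
    appears exactly once in the dataset (i.e. uniquely identifiable)."""
    keys = [tuple(r[a] for a in quasi_identifiers) for r in records]
    counts = Counter(keys)
    return sum(1 for k in keys if counts[k] == 1) / len(keys)

records = [  # hypothetical records
    {"zip": "1000", "birth_year": 1980, "gender": "F", "diagnosis": "A"},
    {"zip": "1000", "birth_year": 1980, "gender": "F", "diagnosis": "B"},
    {"zip": "1000", "birth_year": 1975, "gender": "M", "diagnosis": "A"},
    {"zip": "2000", "birth_year": 1990, "gender": "M", "diagnosis": "C"},
]
print(uniqueness_risk(records, ["zip", "birth_year", "gender"]))  # 0.5
print(uniqueness_risk(records, ["zip"]))                           # 0.25
```

Adding attributes to the set of quasi-identifiers can only keep or increase the number of unique records, which is why coarsening or dropping such attributes is a common way to reduce this risk.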

There are three types of disclosure risk to look out for:

1. Attribute disclosure, which happens when an individual’s attribute (e.g. HIV status) can be determined more precisely with access to the released statistic than without it.

2. Identity disclosure, which happens when you are able to link a record in the protected data to a respondent’s identity. For instance, when an anonymized dataset is merged with another one, it may become possible to discover who an individual is, defeating the privacy protection.

3. Inferential disclosure, which occurs when released data, combined with external information such as Facebook profiles, makes it easier to determine a characteristic of a subject.

There are three common ways to measure disclosure risk:

1. Probabilistic record linkage

2. Distance-based record linkage

3. Interval disclosure
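Of these, distance-based record linkage is the simplest to sketch: an intruder links each protected (masked) record to the closest original record, and the disclosure risk is the share of correct links. The example assumes numeric attributes, Euclidean distance on standardised values, additive Gaussian noise as the masking method, and that row i of the masked data was derived from row i of the original; all of these are illustrative choices.

```python
import numpy as np

def distance_based_linkage_risk(original, masked):
    """Link each masked record to its nearest original record and return the
    fraction of masked records linked back to the record they came from.
    Assumes row i of `masked` was produced from row i of `original`."""
    original = np.asarray(original, dtype=float)
    masked = np.asarray(masked, dtype=float)
    # Standardise with the original data's statistics so that all
    # attributes contribute comparably to the distance.
    mu, sd = original.mean(axis=0), original.std(axis=0)
    sd[sd == 0] = 1.0
    o = (original - mu) / sd
    m = (masked - mu) / sd
    dists = np.linalg.norm(m[:, None, :] - o[None, :, :], axis=2)
    nearest = np.argmin(dists, axis=1)
    return float(np.mean(nearest == np.arange(len(masked))))

rng = np.random.default_rng(1)
original = rng.normal(size=(500, 3))  # hypothetical numeric dataset
for noise in [0.01, 0.1, 0.5, 1.0]:
    masked = original + rng.normal(scale=noise, size=original.shape)
    print(f"noise {noise}: re-identification rate "
          f"{distance_based_linkage_risk(original, masked):.2f}")
```

More noise lowers the re-identification rate but also reduces the analytical value of the data, so the two have to be balanced.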

With Chiron, you can keep track of the links between data, analytics, model versions and reports, making this task easier.

Differential privacy

Another popular solution for ensuring privacy is differential privacy.

Differential privacy, which is not part of Chiron, is a framework for publicly sharing information about a dataset. It does so by describing the patterns of groups within the dataset while withholding information about the individuals in it.

A simple example of differential privacy is the following:

You are managing a sensitive database and would like to release statistics from it to the public. Yet, you first need to ensure that an adversary (a party that intends to reveal or learn at least some of your sensitive data) cannot reverse-engineer the sensitive data from the statistics you release.

Such reverse-engineering is a type of privacy breach. Differential privacy addresses exactly this setting, in which there is sensitive data, a curator who needs to release statistics about it, and an adversary who wants to recover the sensitive data.

The core idea is that if any single record in the database is substituted, the effect on the released result should be small enough that the result cannot be used to infer much about any individual; this is what provides privacy.
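The classic way to achieve this is the Laplace mechanism. The sketch below releases a count: substituting one record changes a count by at most 1 (its sensitivity), so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy. The ages, the query and epsilon = 0.5 are hypothetical; a smaller epsilon means more noise and stronger privacy.

```python
import numpy as np

def laplace_count(data, predicate, epsilon, rng):
    """Release a count under epsilon-differential privacy (Laplace mechanism).
    A count has sensitivity 1, so the noise scale is 1 / epsilon."""
    true_count = sum(1 for row in data if predicate(row))
    return true_count + rng.laplace(loc=0.0, scale=1.0 / epsilon)

rng = np.random.default_rng(42)
ages = [23, 35, 41, 52, 29, 67, 33, 48]  # hypothetical sensitive records
release = laplace_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng)
print(f"noisy count of people over 40: {release:.2f}")
```

Each individual release is noisy, but the noise is unbiased, so aggregate patterns remain usable. Note that every additional query on the same data consumes privacy budget, which a real deployment has to track.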

Requirement 4: Transparency

Traceability, explainability and open communication about the limitations of the AI system are crucial transparency components for achieving trustworthy AI.

In order to make sure that everyone understands the limitations of an algorithm, we need tools to communicate clearly with both technical and non-technical teams. Standardized summary reports such as Model Cards are promising initiatives for practitioners.
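As a rough illustration, a model card can be represented as a small structured record that is rendered into a readable summary. The sketch below uses a subset of the section names from the original Model Cards proposal (Mitchell et al.); the class, its fields and the example content are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class ModelCard:
    """Hypothetical minimal model card; field names follow a subset of the
    sections in the Model Cards proposal."""
    model_details: str
    intended_use: str
    metrics: dict = field(default_factory=dict)
    evaluation_data: str = ""
    ethical_considerations: str = ""
    caveats_and_recommendations: str = ""

    def to_text(self) -> str:
        """Render the card as a plain-text summary for non-technical readers."""
        lines = [
            f"Model details: {self.model_details}",
            f"Intended use: {self.intended_use}",
            "Metrics:",
            *(f"  - {name}: {value}" for name, value in self.metrics.items()),
            f"Evaluation data: {self.evaluation_data}",
            f"Ethical considerations: {self.ethical_considerations}",
            f"Caveats and recommendations: {self.caveats_and_recommendations}",
        ]
        return "\n".join(lines)

card = ModelCard(
    model_details="Fraud classifier, version 1.2 (hypothetical)",
    intended_use="Flag card transactions for manual review; not for automatic blocking",
    metrics={"AUC on hold-out set": "see validation report"},
    evaluation_data="Hold-out sample of recent transactions",
    caveats_and_recommendations="Not validated on merchant categories unseen in training",
)
print(card.to_text())
```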

For communication with non-technical users, a translation layer is needed. To make sure that model decisions are properly understood, there are three broad families of solutions:

– Explainable AI

– Local explainability techniques

– Global explainability techniques

Explainable AI refers here to AI that humans can understand directly, because explainability is built into the technique itself. An example is a shallow decision tree, where you can easily see why the tree reaches a given conclusion.
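To illustrate, here is a hand-written depth-2 decision tree for a hypothetical credit decision; the features, thresholds and outcomes are invented for the example. Because the model is so small, the sequence of tests it applied is itself the explanation.

```python
def shallow_tree_predict(applicant):
    """Hypothetical depth-2 decision tree. Returns the decision together with
    the path of tests that led to it, which serves as the explanation."""
    path = []
    if applicant["debt_to_income"] > 0.4:
        path.append("debt_to_income > 0.4")
        if applicant["years_employed"] >= 5:
            path.append("years_employed >= 5")
            return "approve", path
        path.append("years_employed < 5")
        return "reject", path
    path.append("debt_to_income <= 0.4")
    if applicant["missed_payments"] > 2:
        path.append("missed_payments > 2")
        return "reject", path
    path.append("missed_payments <= 2")
    return "approve", path

decision, why = shallow_tree_predict(
    {"debt_to_income": 0.5, "years_employed": 2, "missed_payments": 0})
print(decision, "because", " and ".join(why))
# reject because debt_to_income > 0.4 and years_employed < 5
```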

Local explainability techniques explain a single AI decision by focusing on a set of similar samples and approximating the algorithm with a simpler model in that neighbourhood. Local explanations are therefore relevant for specific instances or small regions of the input space.

They are mostly used by machine learning engineers and data scientists to audit models before deployment, rather than to explain decisions to end users.
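A minimal sketch of this idea, in the spirit of LIME but simplified: sample points around the instance to explain, weight them by proximity with a Gaussian kernel, and fit a weighted linear model. The black-box function, the sampling scale and the kernel width are assumptions made for the example; the surrogate’s coefficients should approximate the model’s local slopes.

```python
import numpy as np

def local_linear_surrogate(predict_fn, x0, n_samples=5000, scale=0.1, seed=0):
    """Fit a proximity-weighted linear model around x0 and return its
    intercept and coefficients (the local feature effects)."""
    rng = np.random.default_rng(seed)
    x0 = np.asarray(x0, dtype=float)
    X = x0 + rng.normal(scale=scale, size=(n_samples, x0.size))
    y = predict_fn(X)
    # Gaussian proximity kernel: samples closer to x0 get larger weights.
    d2 = np.sum((X - x0) ** 2, axis=1)
    sw = np.sqrt(np.exp(-d2 / (2 * scale ** 2)))
    # Weighted least squares, solved as ordinary least squares on rows
    # rescaled by the square root of the weights.
    A = np.column_stack([np.ones(n_samples), X - x0])
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]

def black_box(X):  # hypothetical non-linear model
    return 3 * X[:, 0] - 2 * X[:, 1] + 0.5 * X[:, 0] ** 2

intercept, effects = local_linear_surrogate(black_box, [1.0, 0.0])
print(np.round(effects, 2))  # close to the local gradient [4, -2]
```

The explanation is only valid near the chosen instance: at another point, the quadratic term would give a different local effect for the first feature.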

Finally, global explainability techniques try to capture the overall behaviour of the model, taking a holistic approach, for instance by displaying the response of the algorithm as a function of a pair of variables.
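One common global technique of this kind is the two-variable partial dependence function: for each pair of grid values, set the two features to those values in every row of the data and average the model’s predictions. The sketch below assumes the model is exposed as a Python function over a NumPy array; the model itself is a hypothetical example with an interaction between the first two features.

```python
import numpy as np

def partial_dependence_2d(predict_fn, X, i, j, grid_i, grid_j):
    """Average model response when features i and j are fixed to each pair
    of grid values, keeping all other features at their observed values."""
    X = np.asarray(X, dtype=float)
    surface = np.empty((len(grid_i), len(grid_j)))
    for a, vi in enumerate(grid_i):
        for b, vj in enumerate(grid_j):
            X_mod = X.copy()
            X_mod[:, i] = vi
            X_mod[:, j] = vj
            surface[a, b] = predict_fn(X_mod).mean()
    return surface

def model(X):  # hypothetical black box with an x0 * x1 interaction
    return X[:, 0] * X[:, 1] + X[:, 2]

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
grid = np.linspace(-1, 1, 3)
print(np.round(partial_dependence_2d(model, X, 0, 1, grid, grid), 2))
```

Plotting the resulting grid as a heat map shows how the two features jointly drive the output; here the averaged response reproduces the product x0 * x1, up to a constant.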

At Yields we value transparency. With Chiron, you can interact with BPMN engines, allowing you to increase the efficiency and transparency of your model risk management. BPMN (Business Process Model and Notation) is a standard notation for modelling business processes, which the various teams can use to coordinate their work across complex workflows.

Requirement 5: Diversity, non-discrimination and fairness

To achieve trustworthy AI, you must eliminate bias from your algorithms, starting, where possible, at the data collection phase. AI systems should be user-centric and serve all people, regardless of their age, gender, education, experience, disabilities or other characteristics.

A well-known example of bias comes from Amazon. The company created an AI platform to recruit new developers, and the algorithm developed a gender bias. This happened because, historically, most developers were men, so the training dataset was highly imbalanced. As a consequence, the AI platform incorrectly inferred that being a woman meant that a person would not be fit for a developer job.

As explained in our previous article “how to fix bias in machine learning algorithms?”, there are three possible answers to this question: unawareness, demographic parity, and equalized odds or equal opportunity.

Unawareness means removing the protected attribute from the model’s inputs. A protected attribute is a data attribute with respect to which the model needs to be fair, for instance the language you speak, your gender, and so on. By removing the attribute, we expect the model to become insensitive to it. However, because other features may be correlated with the protected attribute, it might still be possible to reverse-engineer its value.
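A quick, admittedly crude, check for such proxies is to measure how strongly each remaining feature is associated with the removed protected attribute. The sketch below uses absolute Pearson correlation on synthetic data with a hypothetical proxy feature. It only detects linear, single-feature proxies; a more thorough check would try to predict the protected attribute from all remaining features together.

```python
import numpy as np

def proxy_strength(X, protected, feature_names):
    """Absolute Pearson correlation between each remaining feature and the
    removed protected attribute, sorted from strongest to weakest."""
    X = np.asarray(X, dtype=float)
    protected = np.asarray(protected, dtype=float)
    scores = {name: abs(float(np.corrcoef(X[:, k], protected)[0, 1]))
              for k, name in enumerate(feature_names)}
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))

rng = np.random.default_rng(0)
n = 2000
gender = rng.integers(0, 2, n)               # protected attribute, removed from the model
hobby_club = gender + rng.normal(0, 0.3, n)  # hypothetical proxy tied to gender
experience = rng.normal(5, 2, n)             # hypothetical unrelated feature
X = np.column_stack([hobby_club, experience])
print(proxy_strength(X, gender, ["hobby_club", "experience"]))
```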

A second approach is “demographic parity”. Here, you require the model’s outputs to be the same (for instance, the same rate of positive decisions), regardless of the value of the protected attribute. In some cases, this is not considered fair, as individuals’ characteristics genuinely differ. For instance, if we were to introduce demographic parity in sports, we would have a single 100m sprint world record shared by men and women.
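Demographic parity can be checked by comparing the rate of positive predictions across protected groups. A minimal sketch, with hypothetical predictions and group labels:

```python
import numpy as np

def demographic_parity_difference(y_pred, group):
    """Largest gap in positive-prediction rate between protected groups.
    Demographic parity holds when this is (close to) zero."""
    y_pred = np.asarray(y_pred)
    group = np.asarray(group)
    rates = [y_pred[group == g].mean() for g in np.unique(group)]
    return float(max(rates) - min(rates))

y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(demographic_parity_difference(y_pred, group))  # 0.5
```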

This is why the third approach, “equalized odds”, is very often chosen.

Equalized odds requires the forecasting error to be independent of the protected attribute: given the true outcome, the model makes the same prediction errors for each protected class, i.e. it has the same true positive and false positive rates.
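Equalized odds can be checked by comparing true positive rates and false positive rates across groups. In the hypothetical example below, both gaps are zero even though group a receives positive predictions more often than group b (2 out of 6 versus 1 out of 6), because the two groups have different base rates; this is exactly where equalized odds and demographic parity differ.

```python
import numpy as np

def equalized_odds_gaps(y_true, y_pred, group):
    """Largest between-group gaps in true positive rate (TPR) and false
    positive rate (FPR). Equalized odds holds when both are (close to) zero.
    Assumes every group contains both positive and negative cases."""
    y_true, y_pred, group = map(np.asarray, (y_true, y_pred, group))
    tpr, fpr = [], []
    for g in np.unique(group):
        in_group = group == g
        tpr.append(y_pred[in_group & (y_true == 1)].mean())
        fpr.append(y_pred[in_group & (y_true == 0)].mean())
    return float(max(tpr) - min(tpr)), float(max(fpr) - min(fpr))

y_true = [1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
group = ["a"] * 6 + ["b"] * 6
print(equalized_odds_gaps(y_true, y_pred, group))  # (0.0, 0.0)
```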

Conclusion

Many organizations are in the process of deploying AI applications into production. Making sure that these algorithms are trustworthy is a fundamental concern for these institutions.

As shown throughout this article, Chiron can help you achieve Trustworthy AI. It automates repetitive tasks, allowing your subject-matter experts (SMEs) to focus on their core tasks, and, because it covers the complete model lifecycle, it eases collaboration between the three lines of defence.