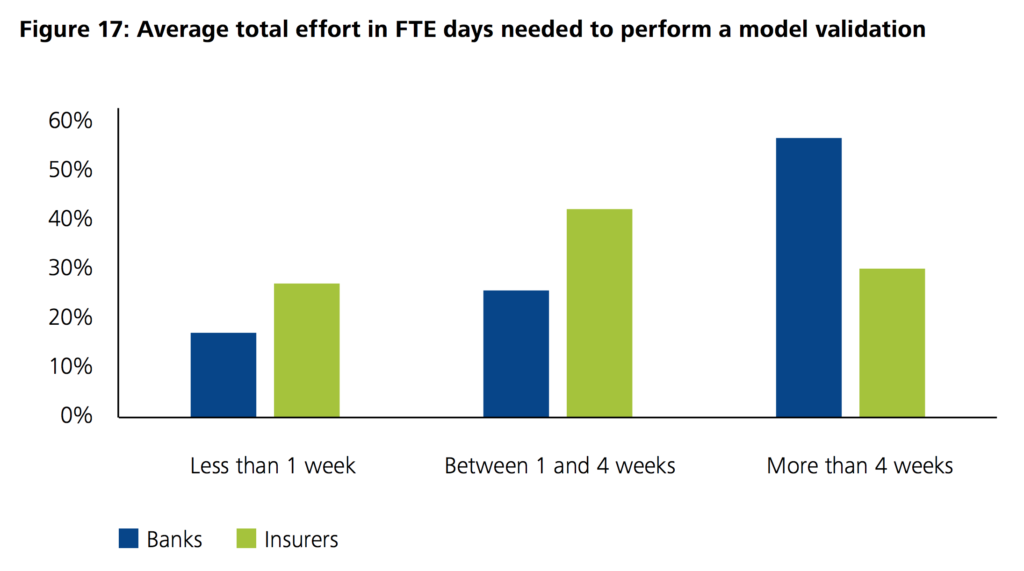

Model validation is a labour-intensive profession that requires specialists who understand both quantitative finance and business practices. Validating a single valuation model typically takes between three and six weeks of hard work covering many different topics. In this first article, I would like to highlight a few ideas on how to automate parts of this analysis.

Data

Models need data. Hence, a large part of a validator's time is spent on managing data. To give a few examples, the validator has to make sure that the input data is of good quality, that the test data is sufficiently rich, and that processes are in place to deal with data issues. Broadly speaking, input data comes in two flavours: time series, and multi-dimensional data that is not primarily indexed by time.
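Several of the input-data checks mentioned above lend themselves to scripting. The sketch below applies three simple checks to a single time series: gaps on business days, stale (frozen) values and outlying day-on-day changes. It is a minimal illustration that assumes the series is a Python dict mapping dates to floats and that every weekday is a business day (no holiday calendar); the function name, thresholds and defaults are hypothetical choices rather than an established standard.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from statistics import mean, stdev


@dataclass
class QualityReport:
    """Findings of the (hypothetical) time-series quality checks."""
    missing_dates: list = field(default_factory=list)
    stale_dates: list = field(default_factory=list)
    outlier_dates: list = field(default_factory=list)


def check_time_series(series: dict[date, float], z_threshold: float = 4.0,
                      max_stale: int = 3) -> QualityReport:
    """Run basic quality checks on a {date: value} time series.

    Assumption: every weekday is a business day (no holiday calendar).
    """
    report = QualityReport()
    dates = sorted(series)
    if not dates:
        return report

    # 1. Gaps: every weekday between the first and last date should be present.
    day = dates[0]
    while day <= dates[-1]:
        if day.weekday() < 5 and day not in series:
            report.missing_dates.append(day)
        day += timedelta(days=1)

    # 2. Stale data: flag a date once the value has been repeated in
    #    `max_stale` consecutive comparisons (e.g. a frozen market-data feed).
    run = 0
    for prev, cur in zip(dates, dates[1:]):
        run = run + 1 if series[cur] == series[prev] else 0
        if run >= max_stale:
            report.stale_dates.append(cur)

    # 3. Outliers: z-score of the day-on-day changes.
    changes = [series[cur] - series[prev] for prev, cur in zip(dates, dates[1:])]
    if len(changes) > 2:
        mu, sigma = mean(changes), stdev(changes)
        for cur, change in zip(dates[1:], changes):
            if sigma > 0 and abs(change - mu) / sigma > z_threshold:
                report.outlier_dates.append(cur)
    return report
```

A plain z-score is itself sensitive to the outliers it is looking for; a robust scale estimate such as the median absolute deviation is a common alternative.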

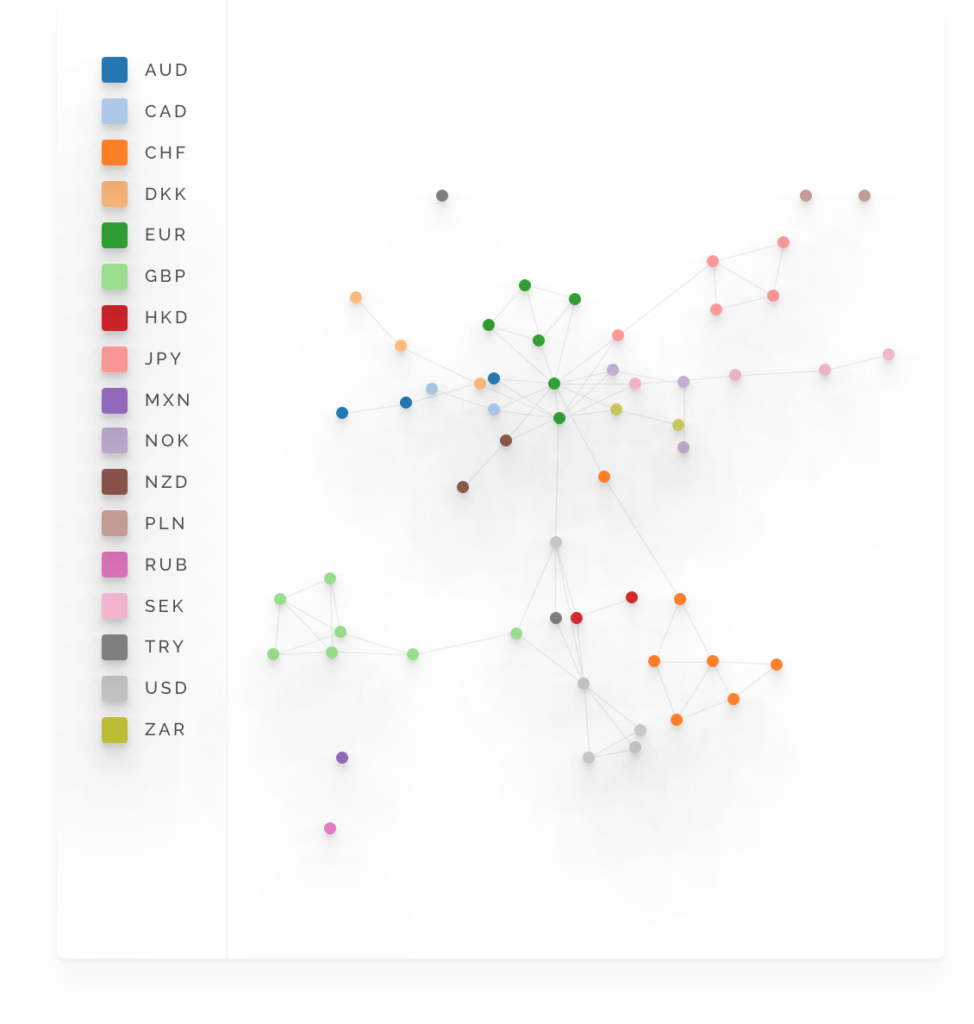

Model dependencies

A model inventory is a necessary tool in a model governance process. On top of keeping track of all the models used, it is extremely valuable to also store the dependencies between models. For instance, computing VaR requires the historical PnL of all portfolios. If these portfolios contain derivatives, we need models to compute their net present value. All the models used within an enterprise can be represented as a graph in which the nodes are the models and the edges represent the data flowing between them. Understanding this topology makes it easier to trace model issues back to their source.
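A minimal sketch of such a dependency graph, assuming an in-memory inventory with hypothetical model and dataset names: each edge records which dataset flows from a producing model to a consuming model. A breadth-first search in the downstream direction lists every model affected by an issue, while a search in the upstream direction lists the candidate root causes.

```python
from collections import defaultdict, deque


class ModelInventory:
    """Minimal in-memory model inventory that also stores data flows.

    An edge producer -> consumer, labelled with a dataset, means that
    `consumer` uses the dataset produced by `producer`.
    """

    def __init__(self) -> None:
        self.downstream = defaultdict(set)  # producer -> {consumers}
        self.upstream = defaultdict(set)    # consumer -> {producers}
        self.datasets = {}                  # (producer, consumer) -> dataset

    def add_dependency(self, producer: str, consumer: str, dataset: str) -> None:
        self.downstream[producer].add(consumer)
        self.upstream[consumer].add(producer)
        self.datasets[(producer, consumer)] = dataset

    @staticmethod
    def _reachable(start: str, edges: dict) -> set:
        # Breadth-first search following `edges` from `start`.
        seen, queue = set(), deque([start])
        while queue:
            node = queue.popleft()
            for nxt in edges.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def impacted_by(self, model: str) -> set:
        """Models that directly or indirectly consume `model`'s output."""
        return self._reachable(model, self.downstream)

    def root_cause_candidates(self, model: str) -> set:
        """Models that directly or indirectly feed into `model`."""
        return self._reachable(model, self.upstream)


# Hypothetical model names, for illustration only.
inventory = ModelInventory()
inventory.add_dependency("yield_curve_bootstrap", "swap_pricer", "discount curves")
inventory.add_dependency("vol_surface_calibration", "option_pricer", "implied vol surface")
inventory.add_dependency("swap_pricer", "historical_pnl", "swap NPVs")
inventory.add_dependency("option_pricer", "historical_pnl", "option NPVs")
inventory.add_dependency("historical_pnl", "var_model", "PnL vectors")

print(sorted(inventory.impacted_by("yield_curve_bootstrap")))
# ['historical_pnl', 'swap_pricer', 'var_model']
print(sorted(inventory.root_cause_candidates("var_model")))
# ['historical_pnl', 'option_pricer', 'swap_pricer', 'vol_surface_calibration', 'yield_curve_bootstrap']
```

Keeping both edge directions makes impact analysis and root-cause tracing equally cheap, at the cost of storing each dependency twice.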

Benchmarking

ML can help with benchmarking models. First of all, in the case of forecasting models, we can easily train alternative algorithms on the realized values. This helps you understand the impact of changing the underlying assumptions of the model.
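As an illustration, the sketch below fits a challenger on realized values and compares its out-of-sample error with that of the production forecasts. To keep it self-contained, the challenger is an ordinary least-squares regression on a single driver, standing in for whatever ML algorithm one would actually use; the function names and the 70/30 time split are assumptions.

```python
from math import sqrt


def fit_ols(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for y = a + b * x (single regressor)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def rmse(pred: list[float], actual: list[float]) -> float:
    """Root-mean-square error between predictions and realizations."""
    return sqrt(sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(actual))


def benchmark(drivers, production_forecasts, realizations, train_fraction=0.7):
    """Compare production forecasts with a challenger fitted on realizations.

    The challenger is trained on the first `train_fraction` of the history
    (time-ordered split) and both models are scored on the remaining part.
    """
    split = int(len(realizations) * train_fraction)
    a, b = fit_ols(drivers[:split], realizations[:split])
    challenger = [a + b * x for x in drivers[split:]]
    return {
        "production_rmse": rmse(production_forecasts[split:], realizations[split:]),
        "challenger_rmse": rmse(challenger, realizations[split:]),
        "challenger_coefficients": (a, b),
    }
```

A time-ordered split, rather than a random one, avoids letting the challenger learn from observations that lie after the period it is scored on.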

However, even when such realizations are not available, we can still train a model to mimic the algorithm used in production. Such surrogate models (see e.g. this paper) can be used to detect changes in behaviour. As an example, suppose we are monitoring an XVA model. We can train an ML algorithm to predict the change in the XVA amounts of a portfolio, i.e. its XVA PnL, from the changes in the market data. Such a model can be used to detect, e.g., instabilities in the XVA computation. An additional benefit of this approach is that the sensitivities of the XVA to the market data can be estimated from the calibrated surrogate.
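A minimal sketch of the XVA example, under strong simplifying assumptions: the surrogate is a linear regression of daily XVA changes on daily market-data changes (standing in for a more flexible ML model), it is calibrated on a window where the production XVA is believed to behave correctly, and a new day is flagged when the production XVA change deviates from the surrogate's prediction by more than a chosen number of calibration residual standard deviations. All names and the threshold are hypothetical. Because the surrogate is linear, its fitted coefficients are directly estimates of the XVA sensitivities to each market factor.

```python
from statistics import mean, stdev


def solve(A: list[list[float]], b: list[float]) -> list[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_surrogate(moves: list[list[float]], xva_changes: list[float]) -> list[float]:
    """Least-squares fit of dXVA ~ sum_k beta_k * dm_k (no intercept).

    Solves the normal equations (X^T X) beta = X^T y; beta estimates the
    sensitivity of the XVA to each market factor.
    """
    k = len(moves[0])
    xtx = [[sum(row[i] * row[j] for row in moves) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(moves, xva_changes)) for i in range(k)]
    return solve(xtx, xty)


def residuals(beta, moves, xva_changes) -> list[float]:
    """Production XVA change minus the surrogate's prediction."""
    return [y - sum(b * m for b, m in zip(beta, row)) for row, y in zip(moves, xva_changes)]


class XvaMonitor:
    """Hypothetical monitor calibrated on a window assumed to be stable."""

    def __init__(self, calib_moves, calib_xva_changes):
        self.beta = fit_surrogate(calib_moves, calib_xva_changes)
        res = residuals(self.beta, calib_moves, calib_xva_changes)
        self.mu, self.sigma = mean(res), stdev(res)

    def flag(self, moves, xva_changes, z_threshold: float = 4.0) -> list[int]:
        """Indices of days whose residual exceeds z_threshold calibration sigmas."""
        res = residuals(self.beta, moves, xva_changes)
        return [i for i, r in enumerate(res) if abs(r - self.mu) > z_threshold * self.sigma]
```

With a non-linear surrogate, the sensitivities would instead be obtained by differentiating or bumping the fitted model.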