Why False Positives Occur

Let’s start by understanding why a state-of-the-art feature like crash detection could mistake dance moves for a car crash.

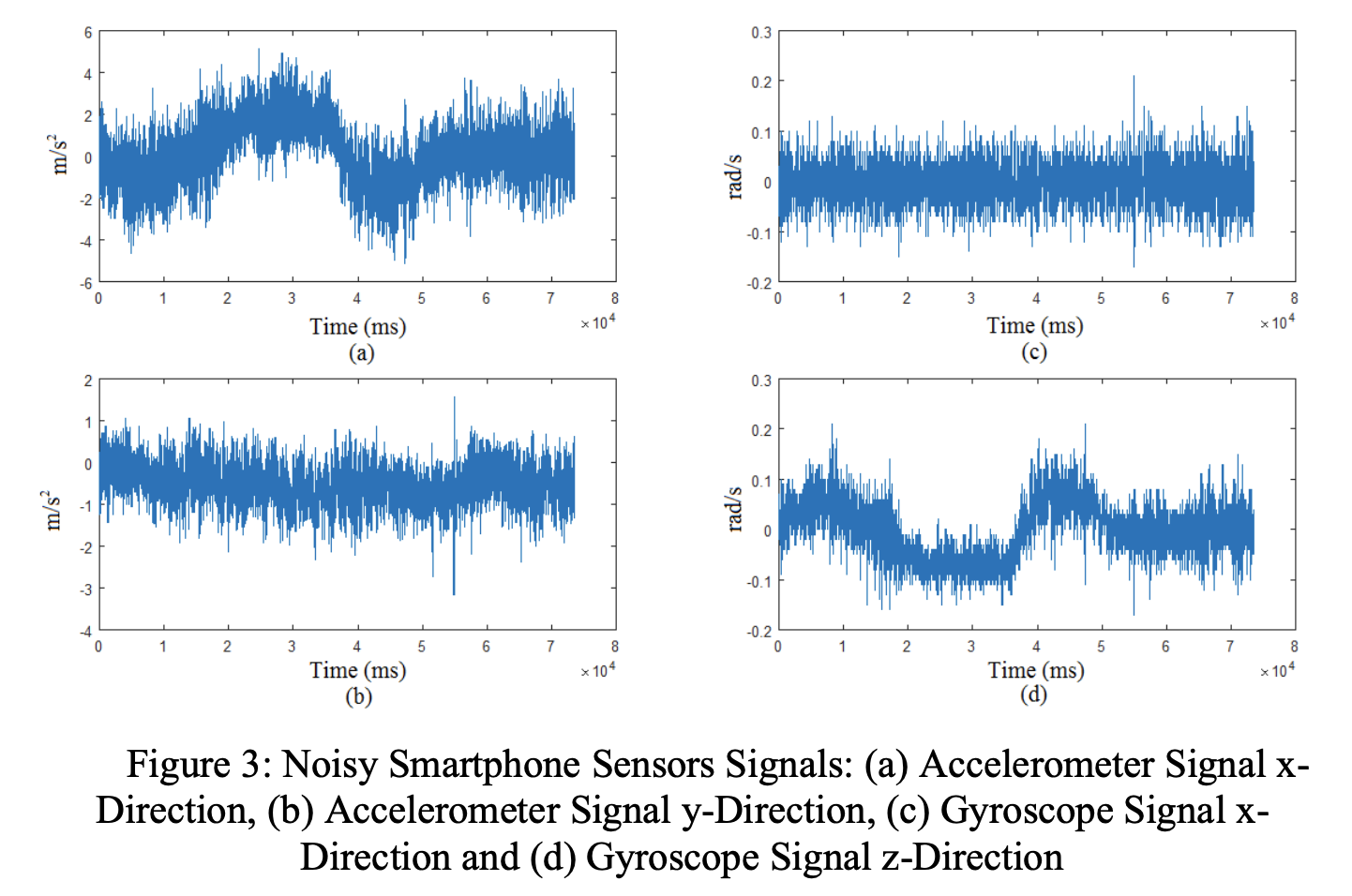

Illustrative sensor noise, taken from M.S.F. Al-Din, “Real-Time Identification and Classification of Driving Maneuvers using Smartphone”.

Sensor Data Noise

Crash detection in smartphones relies on machine learning models that consume data from several sensors, such as accelerometers, gyroscopes and microphones. Although smartphone accelerometers are generally accurate, all sensor data is subject to noise (accelerometers can even be fooled by sound waves), and such noise inevitably reduces accuracy. On top of that, the violent shaking that signals a crash can be mimicked by many high-intensity activities, from skiing down a mountain to riding a rollercoaster and, apparently, dancing at Bonnaroo!
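To see how noise alone can tip a decision, consider a deliberately simplified rule that flags a crash whenever the peak acceleration magnitude in a window of samples exceeds a fixed threshold. This is a sketch, not the multi-sensor machine learning model described above: the 4 g threshold, the `(x, y, z)` sample format, the function names and all sample values are invented for illustration.

```python
import math
from typing import List, Sequence, Tuple

# One accelerometer sample: (x, y, z) in units of g (hypothetical format).
Sample = Tuple[float, float, float]


def magnitude(sample: Sample) -> float:
    """Euclidean norm of a 3-axis accelerometer reading."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def peak_g(window: Sequence[Sample]) -> float:
    """Largest acceleration magnitude observed in a window of samples."""
    return max(magnitude(s) for s in window)


def naive_crash_rule(window: Sequence[Sample], threshold_g: float = 4.0) -> bool:
    """Hypothetical single-feature rule: flag a crash if the peak exceeds threshold_g."""
    return peak_g(window) > threshold_g


def add_noise(window: Sequence[Sample], noise: Sequence[float]) -> List[Sample]:
    """Add a per-sample offset on the z axis, standing in for sensor noise."""
    return [(x, y, z + n) for (x, y, z), n in zip(window, noise)]


# Invented "dancing" window: vigorous but below the 4 g threshold.
dance = [(0.0, 0.0, 1.0), (0.5, 0.2, 3.6), (0.0, 0.0, 1.0), (0.4, 0.1, 3.7)]
# Invented noise: a single 0.5 g spike on the last sample.
noise = [0.0, 0.1, 0.0, 0.5]

print(naive_crash_rule(dance))                    # False
print(naive_crash_rule(add_noise(dance, noise)))  # True
```

The clean "dance" window peaks at about 3.7 g and stays below the threshold, but the 0.5 g noise spike lifts the last sample to about 4.2 g and triggers the rule. A learned model combines many features, yet noise can push an input across its decision boundary in the same way.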

Binary Classification Algorithms

Crash detection functions as a binary classifier: it distinguishes between two states, crash or no crash. Typically, such a classifier outputs the probability that the input belongs to the positive class (here, a crash). A key parameter is therefore the threshold probability above which an input signal is classified as a crash. The optimal threshold strikes a balance between detecting actual crashes and not flooding emergency services with accidental calls. The stakes can hardly be higher.
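To make the trade-off concrete, here is a minimal sketch that sweeps the decision threshold over a handful of model outputs and counts detected crashes and false alarms at each setting. The scores, labels and the helper `confusion_at` are hypothetical; a real evaluation would use a large, labelled dataset.

```python
from typing import List, Tuple


def confusion_at(threshold: float, scores: List[float], labels: List[int]) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) when a score >= threshold is classified as 'crash'."""
    tp = fp = fn = tn = 0
    for score, label in zip(scores, labels):
        predicted_crash = score >= threshold
        if predicted_crash and label == 1:
            tp += 1  # real crash detected
        elif predicted_crash and label == 0:
            fp += 1  # false alarm, e.g. dancing
        elif label == 1:
            fn += 1  # real crash missed
        else:
            tn += 1  # correctly ignored
    return tp, fp, fn, tn


# Invented model outputs P(crash) for 8 events; label 1 = real crash, 0 = no crash.
scores = [0.95, 0.80, 0.62, 0.40, 0.70, 0.55, 0.30, 0.10]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

for t in (0.3, 0.5, 0.75, 0.9):
    tp, fp, fn, tn = confusion_at(t, scores, labels)
    recall = tp / (tp + fn)  # share of real crashes detected
    print(f"threshold={t:.2f}  crashes detected={tp}/{tp + fn}  false alarms={fp}  recall={recall:.2f}")
```

With these invented numbers, a threshold of 0.3 catches all four crashes at the cost of three false alarms, while 0.75 removes every false alarm but misses half of the crashes. Neither extreme is acceptable, which is why the choice of threshold deserves scrutiny well beyond the development team.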

Building a robust AI solution

Sound model risk management (MRM) practices can help reconcile the seemingly opposing interests of different groups and pave the way to more robust models. In the case at hand, three MRM principles stand out.

Independent Model Review

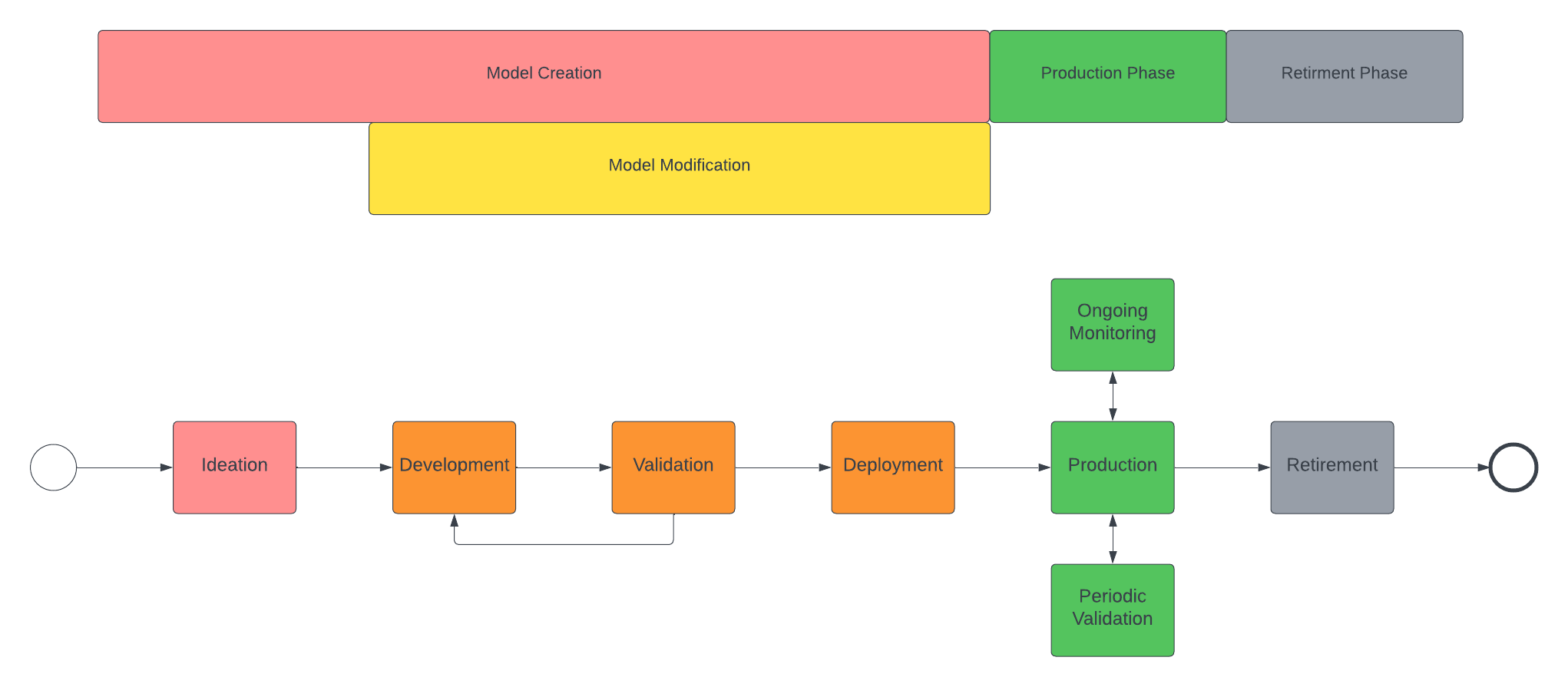

The model lifecycle process, as described in the Yields best-practice MRM framework.

As we highlighted in another post analysing the failed moon landing of ispace, a model can only be deployed in production after it has been independently reviewed. Such a validation can offer fresh perspectives. Validators, equipped with a neutral vantage point, might for instance recognize the value of integrating additional data sources. Geolocation data, for example, could help estimate how likely a crash is: it is highly improbable for a car accident to occur in front of the Bonnaroo festival stage.
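As one illustration of how a validator's suggestion could be implemented, the sketch below re-weights a sensor-only crash probability with a location-specific prior, using Bayes' rule in log-odds space (a standard prior-shift correction). It is not a description of how any vendor's crash detection works. It assumes the sensor probability is calibrated against the crash rate in the training data and that location is conditionally independent of the sensor signal given the true class; all prior values and function names are hypothetical.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def sigmoid(x: float) -> float:
    """Inverse of logit: maps log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def adjust_for_location(p_sensor: float, base_prior: float, location_prior: float) -> float:
    """Swap the training-data prior for a location-specific prior (hypothetical helper).

    Assumes p_sensor is calibrated against base_prior and that location is
    conditionally independent of the sensor signal given crash / no crash.
    """
    return sigmoid(logit(p_sensor) - logit(base_prior) + logit(location_prior))


# Invented numbers for illustration only.
P_SENSOR = 0.8        # sensor model output: 80% crash
BASE_PRIOR = 0.01     # hypothetical crash rate in the training data
ON_HIGHWAY = 0.01     # hypothetical prior on a highway (same as training)
AT_FESTIVAL = 0.0001  # hypothetical prior in front of a festival stage

print(round(adjust_for_location(P_SENSOR, BASE_PRIOR, ON_HIGHWAY), 3))
print(round(adjust_for_location(P_SENSOR, BASE_PRIOR, AT_FESTIVAL), 3))
```

Under these assumptions, the same 80% sensor score is unchanged on the highway but drops to roughly 4% in front of the stage, well below any plausible alert threshold. The conditional-independence assumption is strong, which is exactly the kind of modelling choice an independent review should challenge.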

Inclusive Decision Making

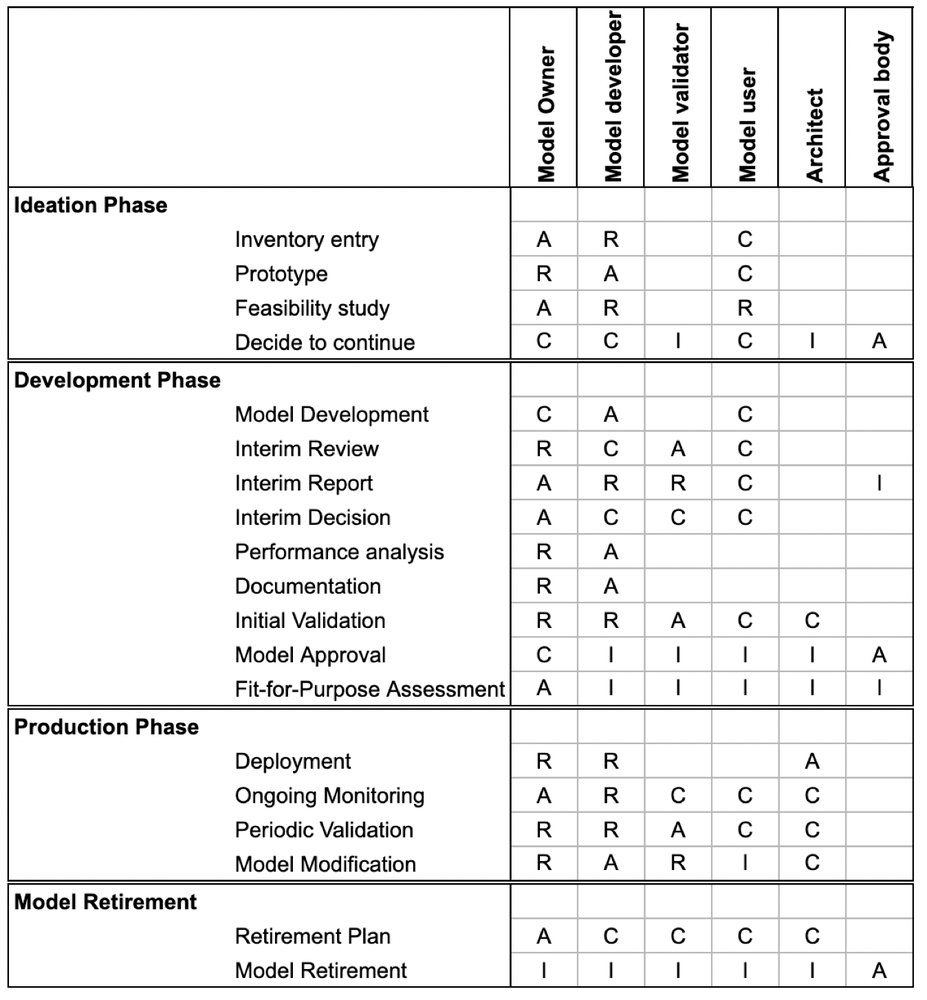

RACI matrix highlighting the importance of the approval body throughout the model lifecycle, taken from the Yields best-practice framework.

Striking the delicate balance between saving lives and avoiding accidental calls requires comprehensive stakeholder involvement. This isn’t just a matter for tech developers or business strategists: it requires collaboration between vendors (like Apple), users and critical services such as 911 operators. Their collective insights can ensure that the technology serves its purpose without putting undue strain on emergency services. In model risk management, this type of stakeholder involvement is built in through a so-called Approval Body, which decides whether a model can be released. That Approval Body must be representative of all teams impacted by the model.