The impact of badly governed AI

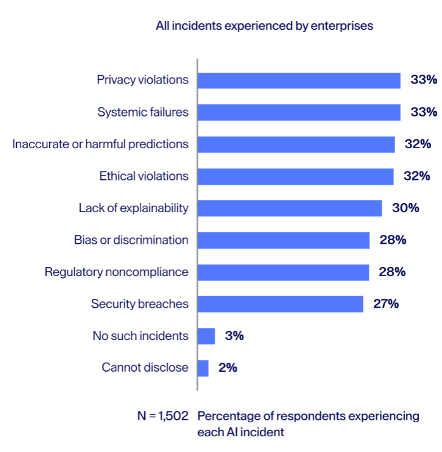

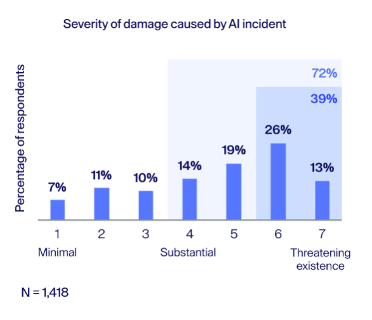

AI-related incidents are no longer hypothetical. 95% of companies have experienced at least one AI incident, and in 39% of cases the damage was severe or extremely severe. (Source: Infosys)

The consequences are tangible and costly:

- Reputation damage

- Financial losses, with an average loss of $800,000 over two years

What is AI governance and why do we need it?

Artificial Intelligence is reshaping how organizations operate, from automating customer interactions to powering strategic decisions and product features. But with this transformative power comes complexity and risk: untracked models, inconsistent controls, unclear ownership, and growing regulatory expectations.

AI governance is the framework that brings structure and oversight to how AI is used across an organization. It gives teams the ability to inventory AI systems, identify and manage risks, and demonstrate compliance with evolving standards, all without slowing down innovation. Rather than relying on spreadsheets, manual processes, or guesswork, AI governance centralizes oversight so companies can scale AI responsibly and confidently.

Based on real-world incidents and industry examples, these are the five hidden truths about AI governance every organization should understand.

01. Governance enables trust, not excessive control

AI governance is often perceived as restrictive. In practice, it can be a powerful competitive advantage.

A strong example is Apple Intelligence. The challenge was clear: integrating large language models with personal data without compromising privacy. The solution was Private Cloud Compute (PCC), which enforces governance directly through the architecture.

By embedding privacy safeguards into the system design, such as controlled decryption processes, Apple enabled users to safely share sensitive data.

Result: privacy-by-design turns governance into a competitive differentiator.

02. AI is used everywhere, but responsibilities are not always clear

As AI adoption accelerates, organizations face a new challenge: not how to build or buy AI, but how to assign responsibility clearly when things go wrong or when oversight is required. Some examples:

The Shadow AI Problem

Employees increasingly use unsanctioned LLMs to process sensitive data, bypassing internal controls entirely.

Vendor vs. User

When a third-party AI system produces biased or harmful results, responsibility is often unclear. Is it the vendor, the developer, or the organization deploying it?

The “Human-in-the-loop” Myth

While organizations claim to have human-in-the-loop controls, these humans often act as passive approvers rather than active decision-makers.

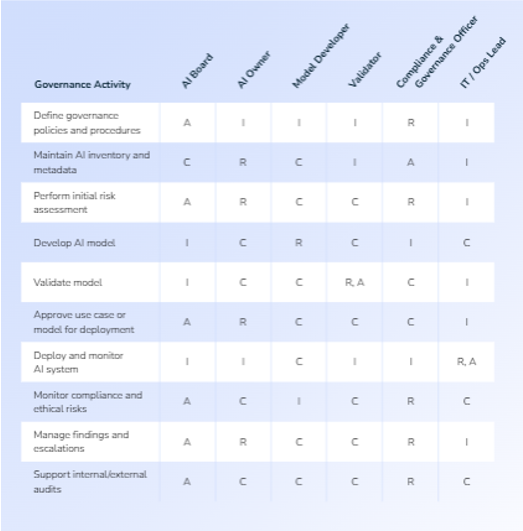

The way forward: defining a RACI matrix specifically for the AI lifecycle

Clarifying who is Responsible (R), Accountable (A), Consulted (C), and Informed (I) creates clarity from development to deployment and beyond.

Example of a RACI

We've created an example RACI, available in our eBook ‘Managing AI Risk’. Download it now!
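One way to keep a RACI from becoming a decorative document is to store it as data and check it automatically. The Python sketch below is a minimal illustration, not the RACI from the eBook: the stage names follow the lifecycle used in this article (Discover, Design, Deploy, Monitor), but the roles and assignments are hypothetical. It applies two widely used RACI conventions: each activity has exactly one Accountable role and at least one Responsible role.

```python
from collections import Counter

# Hypothetical RACI for the AI lifecycle: stage -> {role: "R" | "A" | "C" | "I"}.
# Roles and assignments are illustrative, not a recommended setup.
RACI = {
    "Discover": {"Business owner": "R", "AI governance lead": "A", "Risk": "C", "Legal": "I"},
    "Design": {"Model developer": "R", "Business owner": "A", "Risk": "C", "Legal": "C"},
    "Deploy": {"Model developer": "R", "Business owner": "A", "Risk": "C", "IT operations": "I"},
    "Monitor": {"IT operations": "R", "Risk": "A", "Business owner": "I"},
}


def check_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Flag stages that break two common RACI rules:
    exactly one Accountable role, and at least one Responsible role."""
    problems = []
    for stage, roles in matrix.items():
        counts = Counter(roles.values())  # missing letters count as 0
        if counts["A"] != 1:
            problems.append(f"{stage}: expected exactly one Accountable, found {counts['A']}")
        if counts["R"] == 0:
            problems.append(f"{stage}: no Responsible role assigned")
    return problems


print(check_raci(RACI))  # → []
```

Running the same check on a stage where two roles are marked "A", or where nobody is "R", returns a message naming that stage, so gaps in ownership surface before deployment rather than after an incident.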

03. Risk management is superior to regulatory compliance

AI governance goes beyond compliance. AI risks do not map one-to-one onto regulatory classifications such as the EU AI Act’s “high-risk” category. Several high-impact incidents illustrate this gap:

Air Canada Chatbot

A customer service chatbot provided incorrect information, leading to financial compensation and reputational damage.

Under the EU AI Act: these types of chatbots are not explicitly classified as high-risk.

In practice: the incident caused tangible financial, legal, and trust impact.

Meta AI chatbots

Chatbots provided false medical advice and engaged in inappropriate interactions with minors.

Under the EU AI Act: general-purpose chatbots are not high-risk by default.

In practice: severe ethical, safety, and trust failures occurred.

HR using sensitive data in ChatGPT

HR teams used general-purpose LLMs to process sensitive personal data.

Under the EU AI Act: the tool itself is not necessarily high-risk.

In practice: the use case introduces significant privacy, data protection, and organizational risk.

The conclusion is unavoidable: Compliance with AI regulation does not guarantee safety, ethics, or trust. All AI systems require governance proportional to their real-world impact, not just their regulatory label.
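Proportional governance can be made concrete in an AI inventory. The Python sketch below is hypothetical: the four tiers, the 0 to 3 impact scores and the rule that a regulatory "high-risk" label sets a floor rather than a ceiling are illustrative assumptions, not requirements of the EU AI Act or any standard. It encodes the point above: the level of oversight follows the worst real-world impact, so a use case that is not high-risk under the regulation can still land in a strict tier.

```python
from dataclasses import dataclass

# Illustrative governance tiers, ordered from least to most oversight.
TIERS = ["minimal", "standard", "enhanced", "critical"]


@dataclass
class AIUseCase:
    name: str
    regulatory_high_risk: bool  # e.g. classified as "high-risk" under the EU AI Act
    # Hypothetical impact scores from 0 (none) to 3 (severe), set in a risk assessment.
    financial: int
    safety: int
    privacy: int
    reputational: int


def governance_tier(uc: AIUseCase) -> str:
    """The worst real-world impact sets the tier; a high-risk label can only raise it."""
    level = max(uc.financial, uc.safety, uc.privacy, uc.reputational)
    if uc.regulatory_high_risk:
        level = max(level, TIERS.index("enhanced"))
    return TIERS[level]


chatbot = AIUseCase("Customer service chatbot", False, financial=2, safety=0, privacy=1, reputational=2)
hr_llm = AIUseCase("HR assistant on a general-purpose LLM", False, financial=0, safety=0, privacy=3, reputational=2)
print(governance_tier(chatbot))  # → enhanced
print(governance_tier(hr_llm))   # → critical
```

Neither example carries a high-risk label, yet both end up in a strict tier because of their impact scores. In a real inventory, the scoring criteria and who signs them off would be defined by the organization.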

Effective governance embeds risk management across the full AI lifecycle:

Discover: AI inventory and classification

- Identify all AI systems in use

- Classify risks from the start

Design: policies and controls

- Embed governance into design

- Define roles, policies, and safeguards

Deploy: risk mitigation in operation

- Implement technical and human-in-the-loop controls

- Ensure compliance at launch

Monitor: continuous compliance and audit

- Ongoing monitoring and alerts

- Automated reporting and audit trails

Being compliant isn’t the same as being safe. Thinking fast gives comfort. Thinking slow reveals the truth.

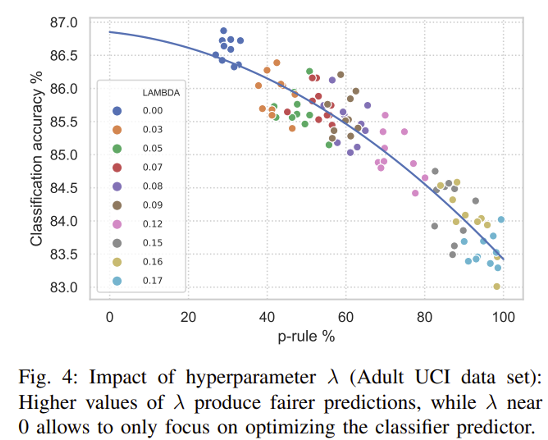

04. Fairness and performance: a false dilemma

Teams often experience fairness as a trade-off. As soon as fairness measures are introduced, model accuracy seems to drop. This leads to a familiar concern: are we sacrificing performance to be fair?

Consider an AI system used to screen job applications. The model is trained on historical hiring data and optimized for accuracy. Overall, it performs well. Most predictions are correct, and the accuracy score looks strong.

However, a closer look reveals a problem. The model makes more mistakes for certain groups, such as women or candidates from underrepresented backgrounds. This is not because the model is broken, but because historical data reflects past hiring patterns. By optimizing purely for accuracy, the model reproduces and amplifies those patterns.

When fairness constraints are introduced, the model is trained to reduce these disparities. The result is a small drop in the original accuracy score. On paper, this looks like worse performance. In reality, the model is no longer allowed to perform well by systematically disfavouring specific groups.

This is the key insight: fairness does not reduce performance. It changes how performance is measured.

Good AI governance makes this explicit. It defines which fairness metrics matter, which trade-offs are acceptable, and who is accountable for those decisions. Instead of treating fairness as an afterthought, governance ensures it is built into the system from the start and can be explained, reviewed, and audited over time.
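The accuracy trade-off described above is easier to see with numbers. The Python sketch below uses invented counts for two hypothetical screening models and takes the gap between group error rates as the fairness metric. That is one common choice among several (demographic parity and equalized odds are others), not a metric this article prescribes.

```python
def evaluate(errors: dict[str, int], sizes: dict[str, int]) -> tuple[float, dict[str, float], float]:
    """Return overall accuracy, per-group error rates, and the gap between
    the worst and best group error rate."""
    total = sum(sizes.values())
    accuracy = (total - sum(errors.values())) / total
    error_rates = {g: errors[g] / sizes[g] for g in sizes}
    gap = max(error_rates.values()) - min(error_rates.values())
    return accuracy, error_rates, gap


# Made-up counts: 100 screened candidates, 90 in group A and 10 in group B.
sizes = {"A": 90, "B": 10}
model_1 = evaluate({"A": 5, "B": 3}, sizes)  # hypothetical accuracy-optimized model
model_2 = evaluate({"A": 7, "B": 2}, sizes)  # hypothetical model trained with a fairness constraint

for name, (acc, rates, gap) in [("model_1", model_1), ("model_2", model_2)]:
    print(f"{name}: accuracy={acc:.2f}, error A={rates['A']:.3f}, error B={rates['B']:.3f}, gap={gap:.3f}")
```

With these numbers, model_1 reaches 0.92 accuracy but errs on 30% of group B against roughly 6% of group A. Model_2 gives up one point of accuracy (0.91) and roughly halves the gap. Which of the two is "better" depends on the metric the governance framework has chosen to own.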

Being accurate is important. Being accountable is what makes AI trustworthy.

05. AI governance is often treated as a checkbox exercise. It’s actually an engineering job

In practice, managing AI risk has far more in common with quality and reliability engineering than with filling in forms.

Consider the iSpace lunar lander crash. iSpace's lander ran out of fuel and crashed on the Moon. The suspected cause was an error during installation and/or testing of the laser rangefinder, or deterioration of its performance during flight. The fix required automated testing of sensor behavior in every decision cycle, supported by digital twins.

AI systems need that same mindset: continuous testing, controls that work in production, and monitoring that catches failure modes before they become incidents.
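Controls that work in production can start simply. The Python sketch below is a hypothetical illustration, not iSpace's testing approach or the API of any monitoring tool: it assumes ground-truth outcomes become available some time after predictions are made, and the window size and threshold are assumptions that a governance framework would need to set and assign an owner to.

```python
from collections import deque


class ErrorRateMonitor:
    """Hypothetical production check: alert when the error rate over the
    most recent `window` labelled predictions exceeds `threshold`."""

    def __init__(self, window: int, threshold: float) -> None:
        self.outcomes: deque[int] = deque(maxlen=window)  # 1 = error, 0 = correct
        self.threshold = threshold

    def record(self, correct: bool) -> bool:
        """Store one outcome and return True if an alert should fire."""
        self.outcomes.append(0 if correct else 1)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough observations yet to judge the window
        return sum(self.outcomes) / len(self.outcomes) > self.threshold


monitor = ErrorRateMonitor(window=10, threshold=0.2)
alerts = [monitor.record(c) for c in [True] * 10 + [False] * 3]
print(alerts.index(True))  # → 12: the third consecutive error pushes the rate to 0.3
```

A fixed-size window keeps the check cheap and responsive to recent behavior; in practice the alert would feed the audit trail and escalation path defined in the RACI rather than just print.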

Author

Efrem Bonfiglioli is a seasoned model and AI risk management professional with a passion for advising model developers and validators on best practices for effective model and AI use case management. He has held various roles related to model risk management across multiple lines of defense in leading global banking institutions, covering a wide range of asset classes and risk types. Efrem is a visiting professor at universities in Italy and the UK where he teaches courses ranging from foundational financial subjects to advanced quantitative modelling. He earned his PhD in Financial Mathematics, where he focused on researching the applications of jump-diffusion models in the context of derivatives pricing.